Jupyter Notebook

Library Import

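import pandas as pd
import numpy as np
import os
import sys

# Make the MOGDx source modules importable from this notebook
sys.path.insert(0, './../MOGDx/MAIN/')

from utils import *
from GNN_MME import *
from train import *
import preprocess_functions

import matplotlib.pyplot as plt
from sklearn.model_selection import StratifiedKFold, train_test_split
import networkx as nx
import torch
from datetime import datetime
import joblib
import warnings
import gc

# Names used below (F, dgl, NeighborSampler, DataLoader, reduce). They may already be
# re-exported by the MOGDx star imports above; importing them explicitly makes the
# dependencies visible.
import torch.nn.functional as F
import dgl
from dgl.dataloading import NeighborSampler, DataLoader
from functools import reduce

warnings.filterwarnings("ignore")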

print("Finished Library Import \n")

Finished Library Import

Specify Arguments

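data_input = './../../../data/raw'     # directory holding the per-modality input data
snf_net = 'RPPA_mRNA_graph.graphml'    # graph file, read from data_input + '/../Networks/'
index_col = 'index'                    # column holding the sample identifiers
target = 'paper_BRCA_Subtype_PAM50'    # label column: PAM50 breast cancer subtype
R_workflow = False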

Model Training

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')  # use the GPU if available, else the CPU
print("Using %s device" % device)
get_gpu_memory()

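# Load the RPPA and mRNA modalities plus the metadata holding the target labels
datModalities, meta = data_parsing(data_input, ['RPPA', 'mRNA'], target, index_col)

graph_file = data_input + '/../Networks/' + snf_net
g = nx.read_graphml(graph_file)

# Sort samples by ID so the label order matches the sorted feature matrix built below
meta = meta.loc[sorted(meta.index)]

# Encode the subtype labels as integer category codes, then as one-hot vectors
label = F.one_hot(torch.tensor(meta.astype('category').cat.codes.to_numpy(), dtype=torch.int64))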
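The label encoding relies on pandas assigning category codes in the sorted order of the category values, so column `k` of `label` corresponds to entry `k` of `meta.astype('category').cat.categories`, the same list later used as display labels. A minimal sketch with made-up subtype labels and sample IDs, using NumPy identity-matrix indexing in place of `F.one_hot`:

```python
import numpy as np
import pandas as pd

# Toy labels standing in for the PAM50 subtype column (sample IDs and values are illustrative)
meta = pd.Series(['LumA', 'Basal', 'Her2', 'LumA', 'LumB', 'Normal'],
                 index=['s3', 's1', 's2', 's5', 's4', 's6'])
meta = meta.loc[sorted(meta.index)]          # same sorting step as the notebook

cats = meta.astype('category')
codes = cats.cat.codes.to_numpy()            # one integer code per sample
categories = list(cats.cat.categories)       # inferred categories come out sorted
one_hot = np.eye(len(categories), dtype=np.int64)[codes]  # NumPy equivalent of F.one_hot

print(categories)
print(one_hot)
```

Because the codes follow the sorted categories, decoding an arg-max index back through `categories` recovers the subtype name.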

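# 3-fold stratified cross-validation: each fold keeps roughly the subtype proportions
# of the full cohort. random_state is not set, so the folds differ from run to run.
skf = StratifiedKFold(n_splits=3, shuffle=True)
print(skf)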
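To illustrate what stratification does here, the sketch below is a simplified stand-in for `StratifiedKFold`, not scikit-learn's exact algorithm: it shuffles each class separately and deals its samples round-robin into `k` folds, so every test fold keeps close to the overall class mix. `stratified_folds` is a hypothetical helper name.

```python
import numpy as np

def stratified_folds(y, k, seed=0):
    """Simplified stratified k-fold: returns a list of (train_idx, test_idx) pairs."""
    rng = np.random.default_rng(seed)
    y = np.asarray(y)
    fold_of = np.empty(len(y), dtype=int)
    for cls in np.unique(y):
        idx = np.flatnonzero(y == cls)
        rng.shuffle(idx)
        # Deal this class's samples round-robin across the k folds
        fold_of[idx] = np.arange(len(idx)) % k
    return [(np.flatnonzero(fold_of != f), np.flatnonzero(fold_of == f)) for f in range(k)]

# Toy, imbalanced labels: 12 of class A, 6 of B, 3 of C
y = np.array(['A'] * 12 + ['B'] * 6 + ['C'] * 3)
for train_idx, test_idx in stratified_folds(y, k=3):
    labels, counts = np.unique(y[test_idx], return_counts=True)
    print(dict(zip(labels.tolist(), counts.tolist())))  # each test fold: 4 A, 2 B, 1 C
```

With an unstratified shuffle, a rare class could be missing from a test fold entirely, which would make per-class metrics for that fold undefined.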

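# Input width of each modality-specific encoder (number of features per modality)
MME_input_shapes = [datModalities[mod].shape[1] for mod in datModalities]

# Merge the modality tables into one feature matrix and sort it by sample ID
h = reduce(merge_dfs, list(datModalities.values()))
h = h.loc[sorted(h.index)]

# Build the DGL graph. Features and labels are assigned by position, so this relies on
# the graph's node order matching the sorted sample order of h and meta.
g = dgl.from_networkx(g, node_attrs=['idx', 'label'])
g.ndata['feat'] = torch.Tensor(h.to_numpy())
g.ndata['label'] = label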
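`merge_dfs` comes from the MOGDx utilities and its definition is not shown here. The printed graph schema reports a `feat` width of 30459, which equals the two encoder input widths, 464 (RPPA) + 29995 (mRNA), suggesting the modalities are concatenated column-wise on the sample index. A hypothetical stand-in, `merge_dfs_sketch`, assuming an outer join on the index with missing values filled by 0 (both choices are assumptions, not MOGDx's documented behaviour):

```python
from functools import reduce
import pandas as pd

def merge_dfs_sketch(left, right):
    """Hypothetical stand-in for merge_dfs: outer-join two sample-by-feature tables
    on their index and fill missing values with 0 (both choices are assumptions)."""
    return left.join(right, how='outer').fillna(0)

rppa = pd.DataFrame({'p1': [1.0, 2.0]}, index=['s2', 's1'])
mrna = pd.DataFrame({'g1': [3.0, 4.0], 'g2': [5.0, 6.0]}, index=['s1', 's3'])

h = reduce(merge_dfs_sketch, [rppa, mrna])
h = h.loc[sorted(h.index)]
print(h)
print(464 + 29995)  # RPPA + mRNA widths; matches the 30459-wide 'feat' in the graph schema
```

Whatever the real join semantics, the column count of the merged table is the sum of the per-modality widths, which is what the GNN input dimension check relies on.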

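# Free the per-modality tables now that they are merged into h
del datModalities
gc.collect()

output_metrics = []   # per-fold metrics returned by evaluate()
test_logits = []      # test-set logits pooled across folds
test_labels = []      # matching test labels pooled across folds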

for i, (train_index, test_index) in enumerate(skf.split(meta.index, meta)) :

model = GCN_MME(MME_input_shapes , [16 , 16] , 64 , [32], len(meta.unique())).to(device)

print(model)

print(g)

g = g.to(device)

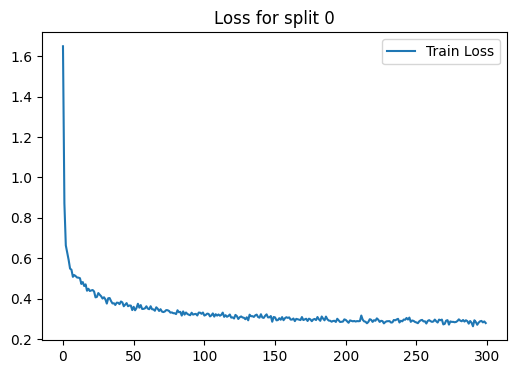

loss_plot = train(g, train_index, device , model , label , 300 , 1e-3 , 100)

plt.title(f'Loss for split {i}')

plt.show()

plt.clf()

sampler = NeighborSampler(

[15 for i in range(len(model.gnnlayers))], # fanout for each layer

prefetch_node_feats=['feat'],

prefetch_labels=['label'],

)

test_dataloader = DataLoader(

g,

torch.Tensor(test_index).to(torch.int64).to(device),

sampler,

device=device,

batch_size=1024,

shuffle=True,

drop_last=False,

num_workers=0,

use_uva=False,

)

test_output_metrics = evaluate(model , g, test_dataloader)

print(

"Fold : {:01d} | Test Accuracy = {:.4f} | F1 = {:.4f} ".format(

i+1 , test_output_metrics[1] , test_output_metrics[2] )

)

test_logits.extend(test_output_metrics[-2])

test_labels.extend(test_output_metrics[-1])

output_metrics.append(test_output_metrics)

if i == 0 :

best_model = model

best_idx = i

elif output_metrics[best_idx][1] < test_output_metrics[1] :

best_model = model

best_idx = i

get_gpu_memory()

del model

gc.collect()

torch.cuda.empty_cache()

print('Clearing gpu memory')

get_gpu_memory()
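The running comparison in the loop keeps the fold with the highest test accuracy (index 1 of each metrics entry). An equivalent, more compact selection is a single `max` over the recorded folds. The tuples below are simplified placeholders: only the accuracy and F1 values are real, copied from the "Fold : k" lines in the logs.

```python
# Simplified stand-ins for output_metrics: index 1 (accuracy) and index 2 (F1) are the
# values printed in the fold logs; index 0 is a placeholder for the other metrics
output_metrics = [
    (None, 0.8747, 0.8500),  # fold 1
    (None, 0.8635, 0.8340),  # fold 2
    (None, 0.8520, 0.8356),  # fold 3
]

best_idx = max(range(len(output_metrics)), key=lambda k: output_metrics[k][1])
print(f"Best fold: {best_idx + 1} (accuracy {output_metrics[best_idx][1]:.4f})")
```

`max` returns the first maximal element, so ties resolve to the earlier fold, matching the strict `<` comparison in the loop.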

# Stack the pooled per-sample tensors into single tensors
test_logits = torch.stack(test_logits)
test_labels = torch.stack(test_labels)

accuracy = [metric[1] for metric in output_metrics]
F1 = [metric[2] for metric in output_metrics]

# Report the actual number of folds used by skf
n_folds = skf.get_n_splits()
print("%i Fold Cross Validation Accuracy = %2.2f \u00B1 %2.2f" % (n_folds, np.mean(accuracy)*100, np.std(accuracy)*100))
print("%i Fold Cross Validation F1 = %2.2f \u00B1 %2.2f" % (n_folds, np.mean(F1)*100, np.std(F1)*100))
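As a worked check of the summary lines at the end of the output, the mean and spread can be recomputed from the three per-fold values printed in the logs (the run used three folds). `np.std` defaults to `ddof=0`, the population standard deviation, which `statistics.pstdev` reproduces:

```python
import statistics

# Per-fold test metrics copied from the "Fold : k" lines in the logs
accuracy = [0.8747, 0.8635, 0.8520]
f1 = [0.8500, 0.8340, 0.8356]

# statistics.pstdev matches np.std's default ddof=0 (population standard deviation)
for name, vals in [("Accuracy", accuracy), ("F1", f1)]:
    mean, std = statistics.mean(vals) * 100, statistics.pstdev(vals) * 100
    print(f"{len(vals)} Fold Cross Validation {name} = {mean:.2f} \u00b1 {std:.2f}")
```

This reproduces 86.34 ± 0.93 and 83.99 ± 0.72. With only three folds the choice of estimator matters: the sample standard deviation (`statistics.stdev`, or `np.std(..., ddof=1)`) gives about 1.1 for accuracy instead of 0.93.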

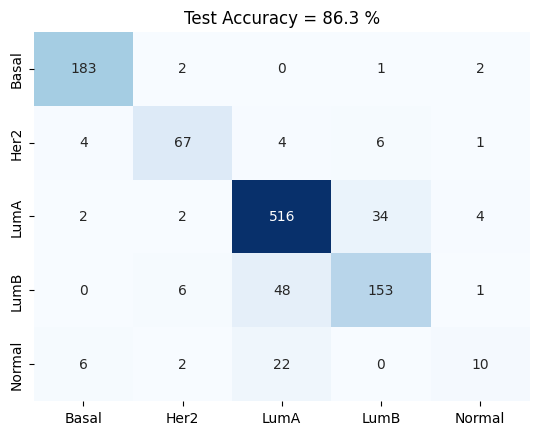

confusion_matrix(test_logits , test_labels , meta.astype('category').cat.categories)

plt.title('Test Accuracy = %2.1f %%' % (np.mean(accuracy)*100))
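`confusion_matrix` is a MOGDx helper whose internals are not shown. Conceptually, a confusion matrix compares the arg-max of each logit row with the arg-max of the one-hot label, the same decoding the notebook applies to `test_labels` further down. A minimal NumPy sketch with toy data; `confusion_counts` is a hypothetical name:

```python
import numpy as np

def confusion_counts(logits, one_hot_labels):
    """Rows = true class, columns = predicted class (arg-max of the logits)."""
    logits = np.asarray(logits)
    true = np.asarray(one_hot_labels).argmax(axis=1)
    pred = logits.argmax(axis=1)
    n = logits.shape[1]
    cm = np.zeros((n, n), dtype=int)
    np.add.at(cm, (true, pred), 1)   # count each (true, predicted) pair
    return cm

logits = np.array([[2.0, 0.1, 0.3],
                   [0.2, 1.5, 0.1],
                   [0.9, 0.4, 0.2],
                   [0.1, 0.2, 3.0]])
labels = np.eye(3, dtype=int)[[0, 1, 2, 2]]   # true classes 0, 1, 2, 2
cm = confusion_counts(logits, labels)
print(cm)
print("accuracy =", np.trace(cm) / cm.sum())
```

Note that the plot title uses the mean of the per-fold accuracies, while a matrix built from the pooled predictions gives the pooled accuracy `trace / total`; with nearly equal-sized folds the two should be close but need not be identical.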

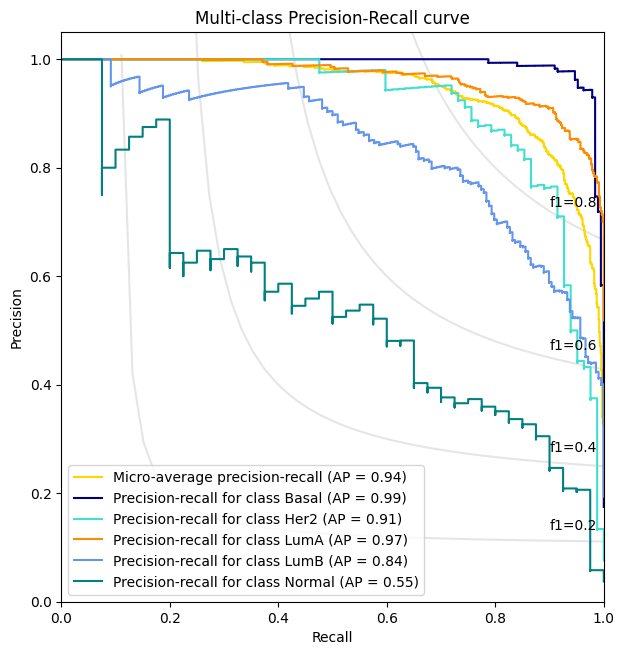

precision_recall_plot , all_predictions_conf = AUROC(test_logits, test_labels , meta)
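`AUROC` is another MOGDx helper; from its use below, `all_predictions_conf` holds per-class scores for every pooled test sample. As a generic illustration of the metric its name refers to (not the helper's implementation), this computes a one-vs-rest ROC AUC for a single class with the pairwise Mann-Whitney formulation: the probability that a randomly chosen positive sample outscores a randomly chosen negative one.

```python
def binary_auroc(scores, is_positive):
    """ROC AUC as P(random positive outscores random negative); ties count 1/2."""
    pos = [s for s, p in zip(scores, is_positive) if p]
    neg = [s for s, p in zip(scores, is_positive) if not p]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = sum(1.0 if sp > sn else 0.5 if sp == sn else 0.0 for sp in pos for sn in neg)
    return wins / (len(pos) * len(neg))

# One-vs-rest for one subtype: score = predicted probability of that subtype
scores = [0.9, 0.4, 0.35, 0.6, 0.5]
is_positive = [True, True, False, True, False]
print(binary_auroc(scores, is_positive))  # 5 of the 6 positive/negative pairs are ordered correctly
```

For a multi-class problem such as PAM50, this is repeated once per subtype (that subtype versus the rest); the pairwise loop is quadratic, so rank-based implementations are preferred for large cohorts.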

# Decode pooled predictions and true labels back to subtype names
node_predictions = []
node_true = []
display_label = meta.astype('category').cat.categories

for pred, true in zip(all_predictions_conf.argmax(1), list(test_labels.detach().cpu().argmax(1).numpy())):
    node_predictions.append(display_label[pred])
    node_true.append(display_label[true])

tst = pd.DataFrame({'Actual': node_true, 'Predicted': node_predictions})
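Once predictions are decoded into an `Actual`/`Predicted` frame like `tst`, pandas can summarise it directly. A small sketch on a made-up frame: `pd.crosstab` gives a labelled confusion table, and a grouped mean of the hit indicator gives per-subtype recall.

```python
import pandas as pd

# Toy stand-in for the notebook's `tst` frame (subtype names, made-up rows)
tst = pd.DataFrame({
    'Actual':    ['LumA', 'LumA', 'Basal', 'Her2', 'LumB', 'LumA'],
    'Predicted': ['LumA', 'LumB', 'Basal', 'Her2', 'LumA', 'LumA'],
})

# Counts of (actual, predicted) pairs, i.e. a labelled confusion table
table = pd.crosstab(tst['Actual'], tst['Predicted'])
print(table)

# Per-subtype recall: fraction of each actual subtype that was predicted correctly
recall = (tst['Actual'] == tst['Predicted']).groupby(tst['Actual']).mean()
print(recall)
```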

Using cuda device

Total = 6.4Gb Reserved = 0.0Gb Allocated = 0.0Gb

StratifiedKFold(n_splits=3, random_state=None, shuffle=True)

GCN_MME(
  (encoder_dims): ModuleList(
    (0): Encoder(
      (encoder): ModuleList(
        (0): Linear(in_features=464, out_features=500, bias=True)
        (1): Linear(in_features=500, out_features=16, bias=True)
      )
      (norm): ModuleList(
        (0): BatchNorm1d(500, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (1): BatchNorm1d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
      (decoder): Sequential(
        (0): Linear(in_features=16, out_features=64, bias=True)
      )
      (drop): Dropout(p=0.5, inplace=False)
    )
    (1): Encoder(
      (encoder): ModuleList(
        (0): Linear(in_features=29995, out_features=500, bias=True)
        (1): Linear(in_features=500, out_features=16, bias=True)
      )
      (norm): ModuleList(
        (0): BatchNorm1d(500, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (1): BatchNorm1d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
      (decoder): Sequential(
        (0): Linear(in_features=16, out_features=64, bias=True)
      )
      (drop): Dropout(p=0.5, inplace=False)
    )
  )
  (gnnlayers): ModuleList(
    (0): GraphConv(in=64, out=32, normalization=both, activation=None)
    (1): GraphConv(in=32, out=5, normalization=both, activation=None)
  )
  (batch_norms): ModuleList(
    (0): BatchNorm1d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  )
  (drop): Dropout(p=0.5, inplace=False)
)
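Reading the architecture off this printout, the parameter budget can be tallied layer by layer. The arithmetic below assumes DGL's `GraphConv` default of a learnable bias (the repr does not show it) and counts only the affine weight and bias of each `BatchNorm1d`, since running statistics are buffers rather than parameters. In the notebook itself, `sum(p.numel() for p in model.parameters())` would give the authoritative figure.

```python
def linear(n_in, n_out):
    return n_in * n_out + n_out          # weight matrix + bias vector

def batchnorm(n):
    return 2 * n                         # affine weight + bias (running stats are buffers)

def encoder(n_in, hidden=500, latent=16, out=64):
    # Linear(n_in->500), Linear(500->16), two BatchNorm1d layers, decoder Linear(16->64)
    return (linear(n_in, hidden) + linear(hidden, latent)
            + batchnorm(hidden) + batchnorm(latent) + linear(latent, out))

# Assumes GraphConv has a bias (DGL's default); weight shape is (in, out)
gnn = linear(64, 32) + linear(32, 5) + batchnorm(32)

rppa, mrna = encoder(464), encoder(29995)
total = rppa + mrna + gnn
print(f"RPPA encoder: {rppa:,}  mRNA encoder: {mrna:,}  GNN: {gnn:,}  total: {total:,}")
print(f"share of first mRNA layer: {linear(29995, 500) / total:.1%}")
```

Under these assumptions the model has about 15.25 million parameters, roughly 98% of them in `Linear(in_features=29995, out_features=500)`; the graph layers themselves are tiny by comparison.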

Graph(num_nodes=1076, num_edges=18312,
      ndata_schemes={'idx': Scheme(shape=(), dtype=torch.int64), 'label': Scheme(shape=(5,), dtype=torch.int64), 'feat': Scheme(shape=(30459,), dtype=torch.float32)}
      edata_schemes={})
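The schema above gives 18312 edges over 1076 nodes, an average in-degree of about 17, so a fanout of 15 per layer samples most, but not all, of a typical node's neighbours. The sketch below is a simplified, pure-Python illustration of layer-wise neighbour sampling of the kind `NeighborSampler([15, 15])` performs; it is not DGL's implementation, and `sample_blocks` is a hypothetical name.

```python
import random

def sample_blocks(adj, seeds, fanouts, rng):
    """Layer-wise neighbour sampling: every frontier node keeps at most `fanout`
    randomly chosen neighbours (all of them if it has fewer)."""
    frontier, layers = set(seeds), []
    for fanout in fanouts:
        edges = []
        for v in frontier:
            nbrs = adj.get(v, [])
            picked = nbrs if len(nbrs) <= fanout else rng.sample(nbrs, fanout)
            edges.extend((u, v) for u in picked)   # message flows u -> v
        layers.append(edges)
        # The next layer must also compute representations for the current nodes
        frontier = frontier | {u for u, _ in edges}
    return layers

# Toy star graph: hub 0 has 20 neighbours, each leaf links back only to 0
adj = {0: list(range(1, 21)), **{k: [0] for k in range(1, 21)}}
layers = sample_blocks(adj, seeds=[0], fanouts=[15, 15], rng=random.Random(0))
print([len(edges) for edges in layers])   # [15, 30]: 15 hub edges, then 15 hub + 15 leaf edges
print(f"average in-degree of the notebook graph: {18312 / 1076:.1f}")
```

Capping the fanout bounds the cost of each mini-batch regardless of how high-degree a node is; with only 1076 nodes here, full-neighbourhood evaluation would also be feasible.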

Epoch 00000 | Loss 1.6488 | Train Acc. 0.3291 |

Epoch 00005 | Loss 0.5487 | Train Acc. 0.8438 |

Epoch 00010 | Loss 0.5044 | Train Acc. 0.8368 |

Epoch 00015 | Loss 0.4624 | Train Acc. 0.8438 |

Epoch 00020 | Loss 0.4406 | Train Acc. 0.8480 |

Epoch 00025 | Loss 0.4275 | Train Acc. 0.8424 |

Epoch 00030 | Loss 0.3965 | Train Acc. 0.8550 |

Epoch 00035 | Loss 0.3763 | Train Acc. 0.8508 |

Epoch 00040 | Loss 0.3726 | Train Acc. 0.8605 |

Epoch 00045 | Loss 0.3778 | Train Acc. 0.8550 |

Epoch 00050 | Loss 0.3595 | Train Acc. 0.8787 |

Epoch 00055 | Loss 0.3680 | Train Acc. 0.8536 |

Epoch 00060 | Loss 0.3507 | Train Acc. 0.8619 |

Epoch 00065 | Loss 0.3400 | Train Acc. 0.8731 |

Epoch 00070 | Loss 0.3360 | Train Acc. 0.8745 |

Epoch 00075 | Loss 0.3389 | Train Acc. 0.8717 |

Epoch 00080 | Loss 0.3234 | Train Acc. 0.8773 |

Epoch 00085 | Loss 0.3367 | Train Acc. 0.8647 |

Epoch 00090 | Loss 0.3178 | Train Acc. 0.8884 |

Epoch 00095 | Loss 0.3166 | Train Acc. 0.8731 |

Epoch 00100 | Loss 0.3162 | Train Acc. 0.8828 |

Epoch 00105 | Loss 0.3172 | Train Acc. 0.8717 |

Epoch 00110 | Loss 0.3216 | Train Acc. 0.8717 |

Epoch 00115 | Loss 0.3181 | Train Acc. 0.8731 |

Epoch 00120 | Loss 0.3074 | Train Acc. 0.8828 |

Epoch 00125 | Loss 0.3078 | Train Acc. 0.8731 |

Epoch 00130 | Loss 0.3076 | Train Acc. 0.8870 |

Epoch 00135 | Loss 0.3094 | Train Acc. 0.8801 |

Epoch 00140 | Loss 0.3228 | Train Acc. 0.8689 |

Epoch 00145 | Loss 0.3073 | Train Acc. 0.8717 |

Epoch 00150 | Loss 0.3072 | Train Acc. 0.8815 |

Epoch 00155 | Loss 0.3086 | Train Acc. 0.8828 |

Epoch 00160 | Loss 0.3070 | Train Acc. 0.8801 |

Epoch 00165 | Loss 0.2999 | Train Acc. 0.8870 |

Epoch 00170 | Loss 0.2939 | Train Acc. 0.8940 |

Epoch 00175 | Loss 0.2972 | Train Acc. 0.8745 |

Epoch 00180 | Loss 0.3090 | Train Acc. 0.8870 |

Epoch 00185 | Loss 0.2926 | Train Acc. 0.8912 |

Epoch 00190 | Loss 0.2858 | Train Acc. 0.8912 |

Epoch 00195 | Loss 0.2921 | Train Acc. 0.8898 |

Epoch 00200 | Loss 0.2946 | Train Acc. 0.8815 |

Epoch 00205 | Loss 0.2877 | Train Acc. 0.8912 |

Epoch 00210 | Loss 0.2890 | Train Acc. 0.8856 |

Epoch 00215 | Loss 0.2780 | Train Acc. 0.8870 |

Epoch 00220 | Loss 0.2932 | Train Acc. 0.8898 |

Epoch 00225 | Loss 0.2903 | Train Acc. 0.8731 |

Epoch 00230 | Loss 0.2882 | Train Acc. 0.8912 |

Epoch 00235 | Loss 0.2926 | Train Acc. 0.8842 |

Epoch 00240 | Loss 0.2877 | Train Acc. 0.8898 |

Epoch 00245 | Loss 0.3062 | Train Acc. 0.8815 |

Epoch 00250 | Loss 0.2820 | Train Acc. 0.8954 |

Epoch 00255 | Loss 0.2872 | Train Acc. 0.8940 |

Epoch 00260 | Loss 0.2884 | Train Acc. 0.8842 |

Epoch 00265 | Loss 0.2816 | Train Acc. 0.8870 |

Epoch 00270 | Loss 0.2750 | Train Acc. 0.8815 |

Epoch 00275 | Loss 0.2848 | Train Acc. 0.8926 |

Epoch 00280 | Loss 0.2975 | Train Acc. 0.8870 |

Epoch 00285 | Loss 0.2929 | Train Acc. 0.8815 |

Epoch 00290 | Loss 0.2632 | Train Acc. 0.8968 |

Epoch 00295 | Loss 0.2877 | Train Acc. 0.8898 |

Fold : 1 | Test Accuracy = 0.8747 | F1 = 0.8500

Total = 6.4Gb Reserved = 0.8Gb Allocated = 0.3Gb

Clearing gpu memory

Total = 6.4Gb Reserved = 0.3Gb Allocated = 0.3Gb

GCN_MME(
  (encoder_dims): ModuleList(
    (0): Encoder(
      (encoder): ModuleList(
        (0): Linear(in_features=464, out_features=500, bias=True)
        (1): Linear(in_features=500, out_features=16, bias=True)
      )
      (norm): ModuleList(
        (0): BatchNorm1d(500, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (1): BatchNorm1d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
      (decoder): Sequential(
        (0): Linear(in_features=16, out_features=64, bias=True)
      )
      (drop): Dropout(p=0.5, inplace=False)
    )
    (1): Encoder(
      (encoder): ModuleList(
        (0): Linear(in_features=29995, out_features=500, bias=True)
        (1): Linear(in_features=500, out_features=16, bias=True)
      )
      (norm): ModuleList(
        (0): BatchNorm1d(500, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (1): BatchNorm1d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
      (decoder): Sequential(
        (0): Linear(in_features=16, out_features=64, bias=True)
      )
      (drop): Dropout(p=0.5, inplace=False)
    )
  )
  (gnnlayers): ModuleList(
    (0): GraphConv(in=64, out=32, normalization=both, activation=None)
    (1): GraphConv(in=32, out=5, normalization=both, activation=None)
  )
  (batch_norms): ModuleList(
    (0): BatchNorm1d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  )
  (drop): Dropout(p=0.5, inplace=False)
)

Graph(num_nodes=1076, num_edges=18312,
      ndata_schemes={'idx': Scheme(shape=(), dtype=torch.int64), 'label': Scheme(shape=(5,), dtype=torch.int64), 'feat': Scheme(shape=(30459,), dtype=torch.float32)}
      edata_schemes={})

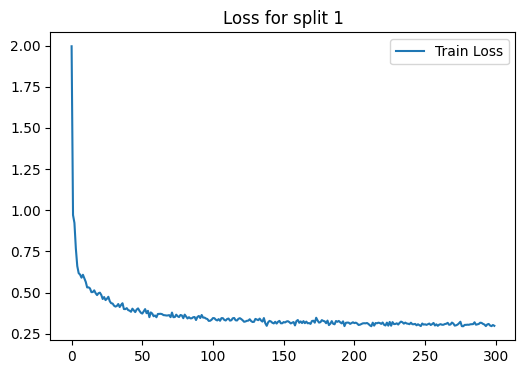

Epoch 00000 | Loss 1.9955 | Train Acc. 0.1953 |

Epoch 00005 | Loss 0.6177 | Train Acc. 0.8145 |

Epoch 00010 | Loss 0.5648 | Train Acc. 0.8047 |

Epoch 00015 | Loss 0.5031 | Train Acc. 0.8243 |

Epoch 00020 | Loss 0.4992 | Train Acc. 0.8117 |

Epoch 00025 | Loss 0.4625 | Train Acc. 0.8340 |

Epoch 00030 | Loss 0.4203 | Train Acc. 0.8396 |

Epoch 00035 | Loss 0.4249 | Train Acc. 0.8466 |

Epoch 00040 | Loss 0.3933 | Train Acc. 0.8591 |

Epoch 00045 | Loss 0.3812 | Train Acc. 0.8536 |

Epoch 00050 | Loss 0.3723 | Train Acc. 0.8619 |

Epoch 00055 | Loss 0.3504 | Train Acc. 0.8647 |

Epoch 00060 | Loss 0.3499 | Train Acc. 0.8787 |

Epoch 00065 | Loss 0.3629 | Train Acc. 0.8577 |

Epoch 00070 | Loss 0.3505 | Train Acc. 0.8731 |

Epoch 00075 | Loss 0.3559 | Train Acc. 0.8647 |

Epoch 00080 | Loss 0.3658 | Train Acc. 0.8577 |

Epoch 00085 | Loss 0.3437 | Train Acc. 0.8703 |

Epoch 00090 | Loss 0.3577 | Train Acc. 0.8689 |

Epoch 00095 | Loss 0.3422 | Train Acc. 0.8619 |

Epoch 00100 | Loss 0.3450 | Train Acc. 0.8675 |

Epoch 00105 | Loss 0.3286 | Train Acc. 0.8675 |

Epoch 00110 | Loss 0.3393 | Train Acc. 0.8605 |

Epoch 00115 | Loss 0.3454 | Train Acc. 0.8577 |

Epoch 00120 | Loss 0.3388 | Train Acc. 0.8675 |

Epoch 00125 | Loss 0.3295 | Train Acc. 0.8745 |

Epoch 00130 | Loss 0.3399 | Train Acc. 0.8647 |

Epoch 00135 | Loss 0.3234 | Train Acc. 0.8717 |

Epoch 00140 | Loss 0.3278 | Train Acc. 0.8717 |

Epoch 00145 | Loss 0.3115 | Train Acc. 0.8675 |

Epoch 00150 | Loss 0.3207 | Train Acc. 0.8773 |

Epoch 00155 | Loss 0.3124 | Train Acc. 0.8745 |

Epoch 00160 | Loss 0.3332 | Train Acc. 0.8647 |

Epoch 00165 | Loss 0.3139 | Train Acc. 0.8661 |

Epoch 00170 | Loss 0.3272 | Train Acc. 0.8759 |

Epoch 00175 | Loss 0.3174 | Train Acc. 0.8787 |

Epoch 00180 | Loss 0.3138 | Train Acc. 0.8745 |

Epoch 00185 | Loss 0.3104 | Train Acc. 0.8828 |

Epoch 00190 | Loss 0.3193 | Train Acc. 0.8801 |

Epoch 00195 | Loss 0.3175 | Train Acc. 0.8745 |

Epoch 00200 | Loss 0.3142 | Train Acc. 0.8675 |

Epoch 00205 | Loss 0.3090 | Train Acc. 0.8856 |

Epoch 00210 | Loss 0.3089 | Train Acc. 0.8801 |

Epoch 00215 | Loss 0.3147 | Train Acc. 0.8731 |

Epoch 00220 | Loss 0.3181 | Train Acc. 0.8745 |

Epoch 00225 | Loss 0.3215 | Train Acc. 0.8759 |

Epoch 00230 | Loss 0.3131 | Train Acc. 0.8745 |

Epoch 00235 | Loss 0.3111 | Train Acc. 0.8745 |

Epoch 00240 | Loss 0.3168 | Train Acc. 0.8731 |

Epoch 00245 | Loss 0.3074 | Train Acc. 0.8898 |

Epoch 00250 | Loss 0.3071 | Train Acc. 0.8801 |

Epoch 00255 | Loss 0.3080 | Train Acc. 0.8759 |

Epoch 00260 | Loss 0.3050 | Train Acc. 0.8773 |

Epoch 00265 | Loss 0.3103 | Train Acc. 0.8773 |

Epoch 00270 | Loss 0.3118 | Train Acc. 0.8703 |

Epoch 00275 | Loss 0.3227 | Train Acc. 0.8801 |

Epoch 00280 | Loss 0.3048 | Train Acc. 0.8801 |

Epoch 00285 | Loss 0.3193 | Train Acc. 0.8773 |

Epoch 00290 | Loss 0.3155 | Train Acc. 0.8884 |

Epoch 00295 | Loss 0.3092 | Train Acc. 0.8870 |


Fold : 2 | Test Accuracy = 0.8635 | F1 = 0.8340

Total = 6.4Gb Reserved = 0.9Gb Allocated = 0.4Gb

Clearing gpu memory

Total = 6.4Gb Reserved = 0.3Gb Allocated = 0.3Gb

GCN_MME(
  (encoder_dims): ModuleList(
    (0): Encoder(
      (encoder): ModuleList(
        (0): Linear(in_features=464, out_features=500, bias=True)
        (1): Linear(in_features=500, out_features=16, bias=True)
      )
      (norm): ModuleList(
        (0): BatchNorm1d(500, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (1): BatchNorm1d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
      (decoder): Sequential(
        (0): Linear(in_features=16, out_features=64, bias=True)
      )
      (drop): Dropout(p=0.5, inplace=False)
    )
    (1): Encoder(
      (encoder): ModuleList(
        (0): Linear(in_features=29995, out_features=500, bias=True)
        (1): Linear(in_features=500, out_features=16, bias=True)
      )
      (norm): ModuleList(
        (0): BatchNorm1d(500, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (1): BatchNorm1d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
      (decoder): Sequential(
        (0): Linear(in_features=16, out_features=64, bias=True)
      )
      (drop): Dropout(p=0.5, inplace=False)
    )
  )
  (gnnlayers): ModuleList(
    (0): GraphConv(in=64, out=32, normalization=both, activation=None)
    (1): GraphConv(in=32, out=5, normalization=both, activation=None)
  )
  (batch_norms): ModuleList(
    (0): BatchNorm1d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  )
  (drop): Dropout(p=0.5, inplace=False)
)

Graph(num_nodes=1076, num_edges=18312,
      ndata_schemes={'idx': Scheme(shape=(), dtype=torch.int64), 'label': Scheme(shape=(5,), dtype=torch.int64), 'feat': Scheme(shape=(30459,), dtype=torch.float32)}
      edata_schemes={})

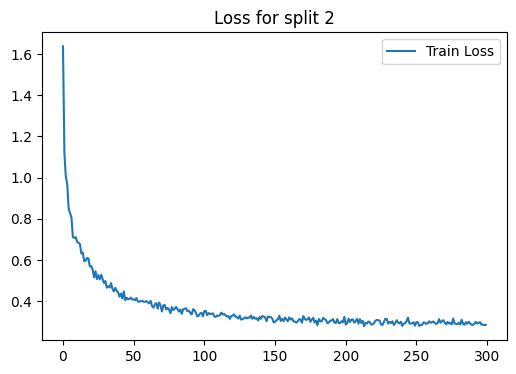

Epoch 00000 | Loss 1.6374 | Train Acc. 0.2925 |

Epoch 00005 | Loss 0.8284 | Train Acc. 0.7423 |

Epoch 00010 | Loss 0.6880 | Train Acc. 0.7994 |

Epoch 00015 | Loss 0.5944 | Train Acc. 0.8301 |

Epoch 00020 | Loss 0.5708 | Train Acc. 0.8162 |

Epoch 00025 | Loss 0.5267 | Train Acc. 0.8329 |

Epoch 00030 | Loss 0.4981 | Train Acc. 0.8468 |

Epoch 00035 | Loss 0.4592 | Train Acc. 0.8565 |

Epoch 00040 | Loss 0.4223 | Train Acc. 0.8565 |

Epoch 00045 | Loss 0.4175 | Train Acc. 0.8593 |

Epoch 00050 | Loss 0.4097 | Train Acc. 0.8621 |

Epoch 00055 | Loss 0.4006 | Train Acc. 0.8607 |

Epoch 00060 | Loss 0.3958 | Train Acc. 0.8663 |

Epoch 00065 | Loss 0.3843 | Train Acc. 0.8649 |

Epoch 00070 | Loss 0.3505 | Train Acc. 0.8649 |

Epoch 00075 | Loss 0.3592 | Train Acc. 0.8649 |

Epoch 00080 | Loss 0.3727 | Train Acc. 0.8649 |

Epoch 00085 | Loss 0.3613 | Train Acc. 0.8691 |

Epoch 00090 | Loss 0.3435 | Train Acc. 0.8788 |

Epoch 00095 | Loss 0.3270 | Train Acc. 0.8774 |

Epoch 00100 | Loss 0.3530 | Train Acc. 0.8760 |

Epoch 00105 | Loss 0.3390 | Train Acc. 0.8733 |

Epoch 00110 | Loss 0.3297 | Train Acc. 0.8788 |

Epoch 00115 | Loss 0.3317 | Train Acc. 0.8719 |

Epoch 00120 | Loss 0.3301 | Train Acc. 0.8691 |

Epoch 00125 | Loss 0.3306 | Train Acc. 0.8844 |

Epoch 00130 | Loss 0.3173 | Train Acc. 0.8886 |

Epoch 00135 | Loss 0.3239 | Train Acc. 0.8816 |

Epoch 00140 | Loss 0.3148 | Train Acc. 0.8928 |

Epoch 00145 | Loss 0.3263 | Train Acc. 0.8747 |

Epoch 00150 | Loss 0.3003 | Train Acc. 0.8886 |

Epoch 00155 | Loss 0.3147 | Train Acc. 0.8802 |

Epoch 00160 | Loss 0.3221 | Train Acc. 0.8788 |

Epoch 00165 | Loss 0.2964 | Train Acc. 0.8900 |

Epoch 00170 | Loss 0.3278 | Train Acc. 0.8649 |

Epoch 00175 | Loss 0.3006 | Train Acc. 0.8830 |

Epoch 00180 | Loss 0.2837 | Train Acc. 0.8816 |

Epoch 00185 | Loss 0.3136 | Train Acc. 0.8928 |

Epoch 00190 | Loss 0.3064 | Train Acc. 0.8830 |

Epoch 00195 | Loss 0.2943 | Train Acc. 0.8830 |

Epoch 00200 | Loss 0.2874 | Train Acc. 0.9011 |

Epoch 00205 | Loss 0.3118 | Train Acc. 0.8788 |

Epoch 00210 | Loss 0.3138 | Train Acc. 0.8858 |

Epoch 00215 | Loss 0.2926 | Train Acc. 0.8955 |

Epoch 00220 | Loss 0.2942 | Train Acc. 0.9039 |

Epoch 00225 | Loss 0.2872 | Train Acc. 0.8844 |

Epoch 00230 | Loss 0.2919 | Train Acc. 0.8872 |

Epoch 00235 | Loss 0.2935 | Train Acc. 0.8886 |

Epoch 00240 | Loss 0.2808 | Train Acc. 0.9025 |

Epoch 00245 | Loss 0.2929 | Train Acc. 0.8955 |

Epoch 00250 | Loss 0.2992 | Train Acc. 0.8858 |

Epoch 00255 | Loss 0.2974 | Train Acc. 0.8900 |

Epoch 00260 | Loss 0.2960 | Train Acc. 0.8886 |

Epoch 00265 | Loss 0.2951 | Train Acc. 0.8830 |

Epoch 00270 | Loss 0.2971 | Train Acc. 0.8872 |

Epoch 00275 | Loss 0.2878 | Train Acc. 0.8969 |

Epoch 00280 | Loss 0.2967 | Train Acc. 0.8955 |

Epoch 00285 | Loss 0.2985 | Train Acc. 0.8830 |

Epoch 00290 | Loss 0.2855 | Train Acc. 0.8928 |

Epoch 00295 | Loss 0.2990 | Train Acc. 0.8802 |


Fold : 3 | Test Accuracy = 0.8520 | F1 = 0.8356

Total = 6.4Gb Reserved = 0.9Gb Allocated = 0.4Gb

Clearing gpu memory

Total = 6.4Gb Reserved = 0.3Gb Allocated = 0.3Gb

5 Fold Cross Validation Accuracy = 86.34 ± 0.93

5 Fold Cross Validation F1 = 83.99 ± 0.72
