MOGDx Free

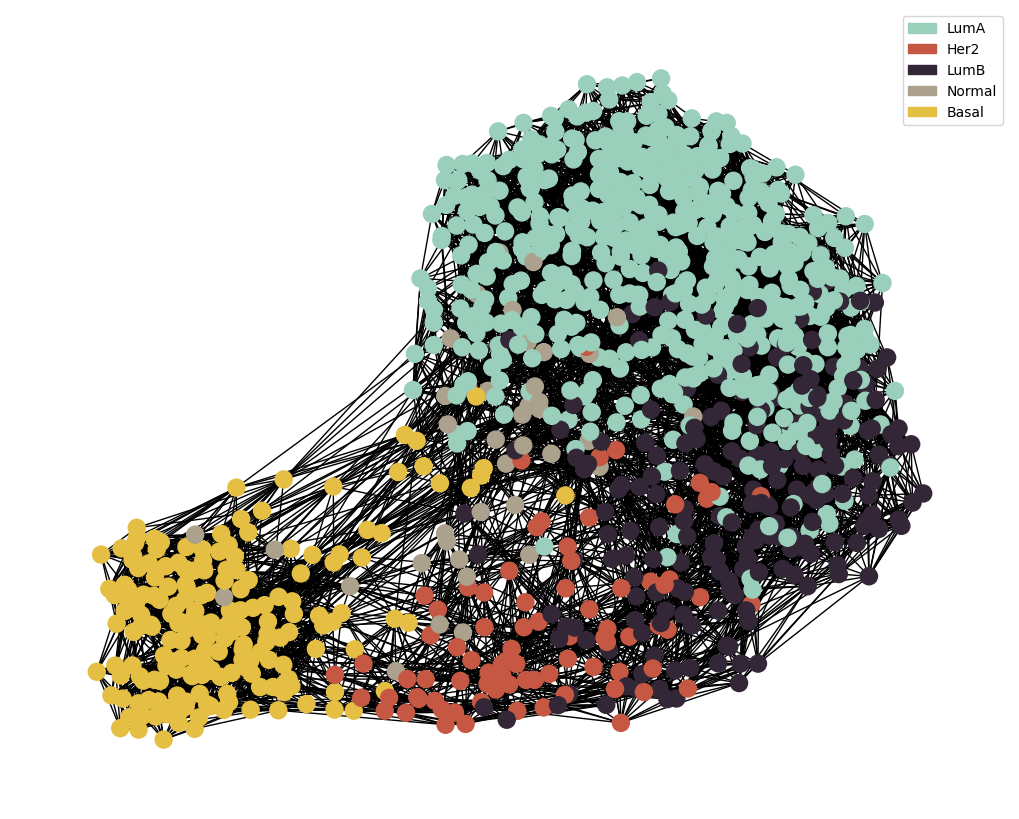

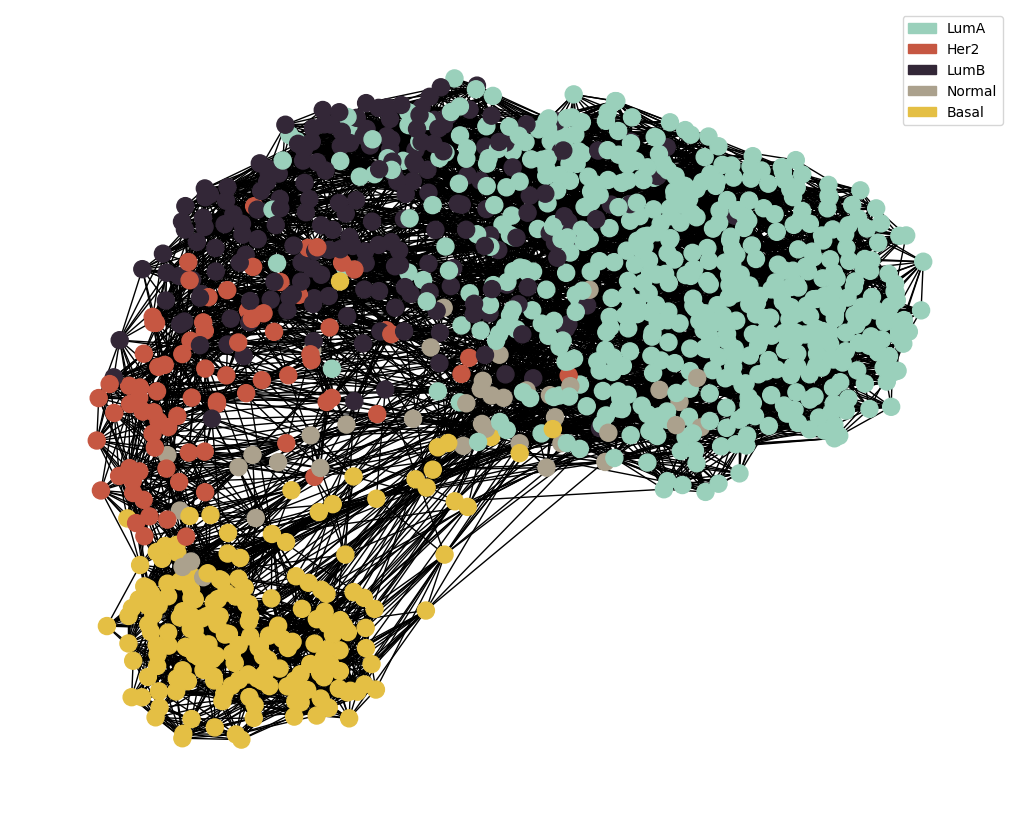

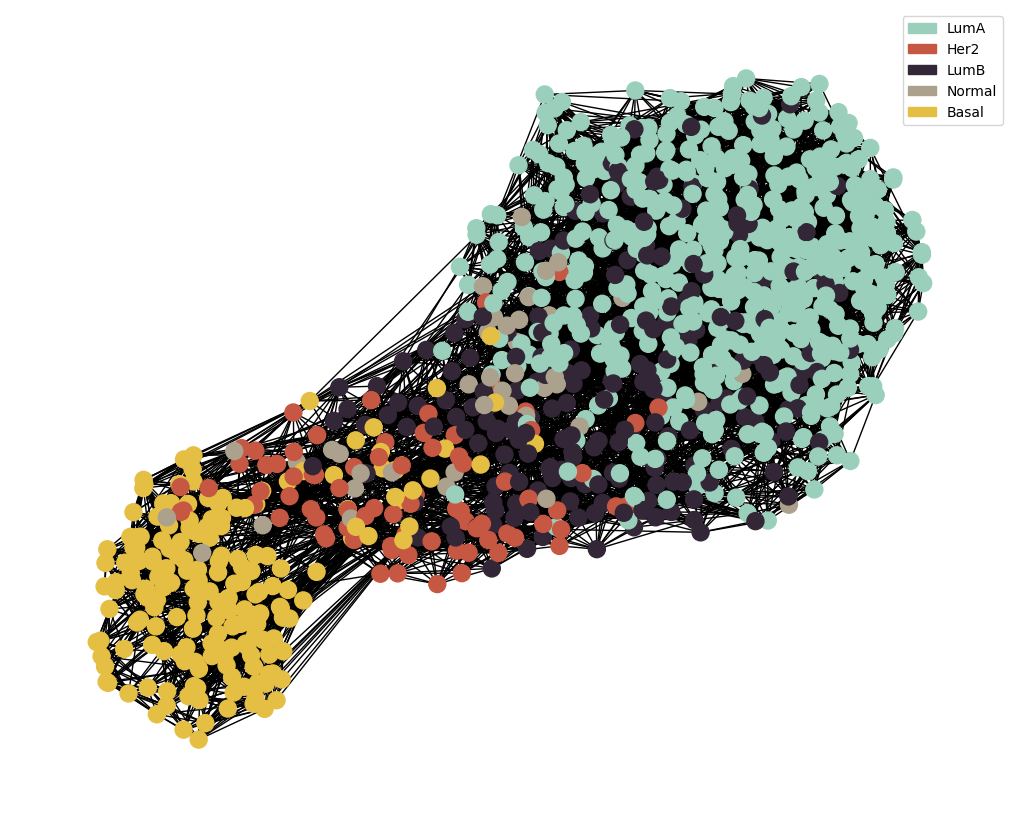

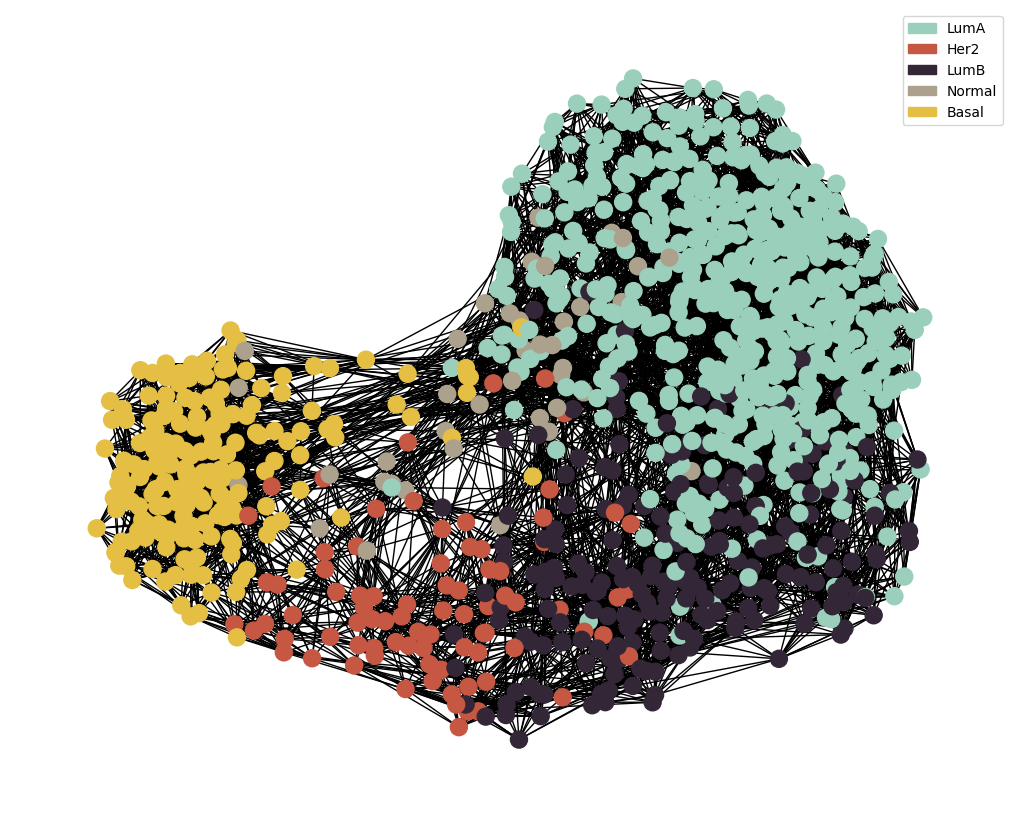

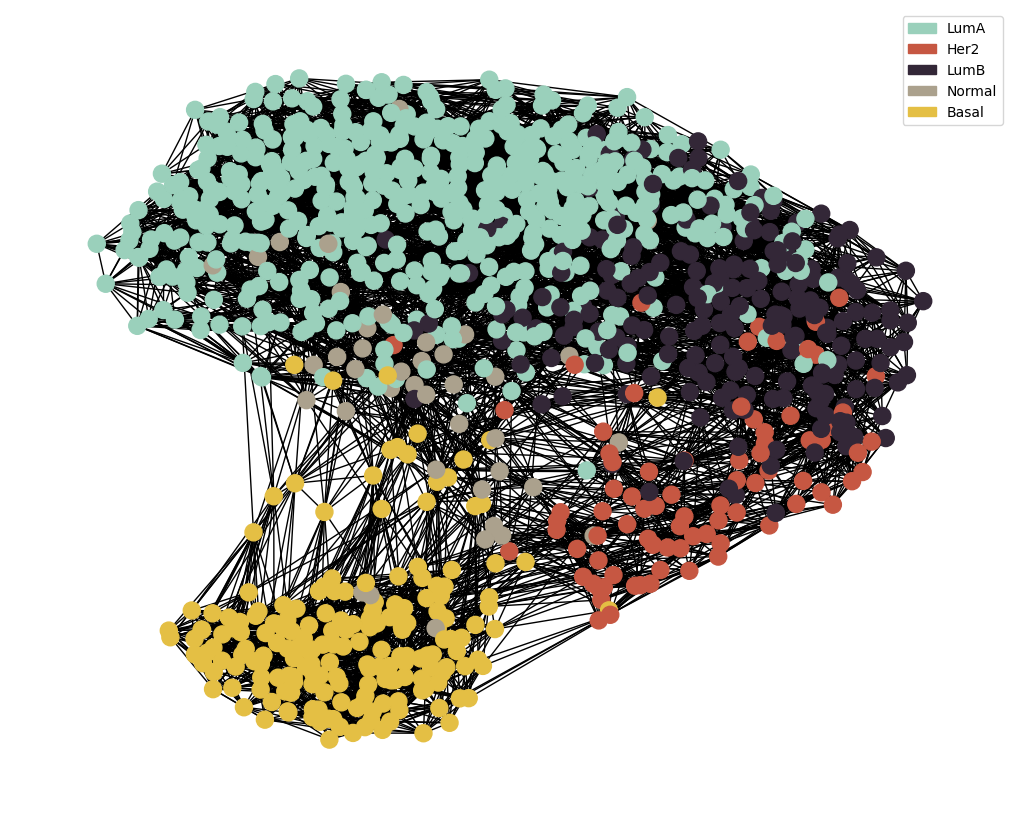

This architecture generates patient similarity networks directly from the embeddings.

Each model is first pretrained so that it produces reasonable embeddings. Distances between patients are then measured in the embedding space to build the network, and the model weights are reset. Finally, MOGDx is trained as normal on the resulting network.
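The notebook does not show how distances in the embedding space become a network, so the following is only a minimal sketch of one common choice: linking each patient to its k nearest neighbours by Euclidean distance. The names `knn_edges`, `toy_embeddings` and the parameter `k` are hypothetical and are not part of the MOGDx code base; the actual construction inside `train(..., pretrain=True)` may differ.

```python
import math


def knn_edges(embeddings, k):
    """Return undirected edges linking each patient to its k nearest neighbours.

    Assumption: Euclidean distance and a plain kNN rule; this is an
    illustration, not the method used by MOGDx itself.
    """
    edges = set()
    for i, emb_i in enumerate(embeddings):
        # Distance from patient i to every other patient
        dists = [(math.dist(emb_i, emb_j), j)
                 for j, emb_j in enumerate(embeddings) if j != i]
        # Keep the k closest patients; store each undirected edge once
        for _, j in sorted(dists)[:k]:
            edges.add((min(i, j), max(i, j)))
    return sorted(edges)


# Toy 2-D embeddings for four hypothetical patients forming two tight pairs
toy_embeddings = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 5.0)]
print(knn_edges(toy_embeddings, k=1))  # [(0, 1), (2, 3)]
```

An edge list of this kind could then be loaded into a `networkx` graph and converted with `dgl.from_networkx`, which is how the notebook consumes the network returned by pretraining.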

import pandas as pd

import numpy as np

import os

import sys

from functools import reduce

sys.path.insert(0 , './../')

from MAIN.utils import *

from MAIN.train import *

import MAIN.preprocess_functions

from MAIN.GNN_MME import GCN_MME , GSage_MME , GAT_MME

import torch

import torch.nn.functional as F

import dgl

from dgl.dataloading import DataLoader , MultiLayerFullNeighborSampler , NeighborSampler

import matplotlib.pyplot as plt

from sklearn.model_selection import StratifiedKFold

import networkx as nx

from datetime import datetime

import joblib

import warnings

import gc

import copy

warnings.filterwarnings("ignore")

print("Finished Library Import \n")

Finished Library Import

data_input = './../../data/TCGA/BRCA/raw/'

snf_net = 'RPPA_mRNA_graph.graphml'

index_col = 'index'

target = 'paper_BRCA_Subtype_PAM50'

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')  # Use the GPU if one is available, else the CPU

print("Using %s device" % device)

get_gpu_memory()

datModalities , meta = data_parsing(data_input , ['RPPA', 'mRNA'] , target , index_col)

graph_file = data_input + '../Networks/' + snf_net

g = nx.read_graphml(graph_file)

meta = meta.loc[sorted(meta.index)]

label = F.one_hot(torch.Tensor(list(meta.astype('category').cat.codes)).to(torch.int64))

skf = StratifiedKFold(n_splits=5 , shuffle=True)

print(skf)

MME_input_shapes = [datModalities[mod].shape[1] for mod in datModalities]

h = reduce(merge_dfs , list(datModalities.values()))

h = h.loc[sorted(h.index)]

del datModalities

gc.collect()

output_metrics = []

test_logits = []

test_labels = []

for i, (train_index, test_index) in enumerate(skf.split(meta.index, meta)) :

model = GCN_MME(MME_input_shapes , [16 , 16] , 64 , [32] , len(meta.unique())).to(device)

g = dgl.graph(([], []) , num_nodes=len(meta))

g = dgl.add_self_loop(g)

g.ndata['feat'] = torch.Tensor(h.to_numpy())

g.ndata['label'] = label

g = g.to(device)

print(model)

print(g)

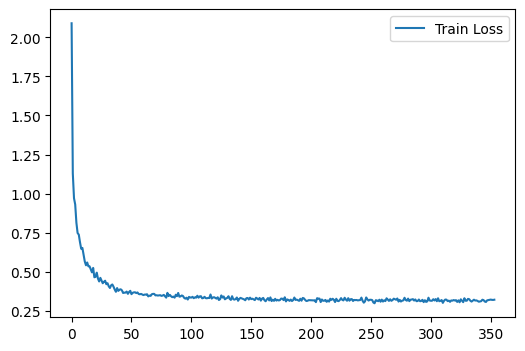

G = train(g, train_index, device , model , meta , 500 , 1e-3 , 20, pretrain=True)

sampler = NeighborSampler(

[15 for i in range(len(model.gnnlayers))], # fanout for each layer

prefetch_node_feats=['feat'],

prefetch_labels=['label'],

)

test_dataloader = DataLoader(

g,

torch.Tensor(test_index).to(torch.int64).to(device),

sampler,

device=device,

batch_size=1024,

shuffle=True,

drop_last=False,

num_workers=0,

use_uva=False,

)

test_output_metrics = evaluate(model , g, test_dataloader)

print(

"Pretraining | Loss = {:.4f} | Accuracy = {:.4f} ".format(

test_output_metrics[0] , test_output_metrics[1] )

)

with torch.no_grad():

torch.cuda.empty_cache()

gc.collect()

model = model.apply(init_weights)

g = dgl.from_networkx(G , node_attrs=['idx' , 'label'])

g.ndata['feat'] = torch.Tensor(h.to_numpy())

g.ndata['label'] = label

g = g.to(device)

print(g)

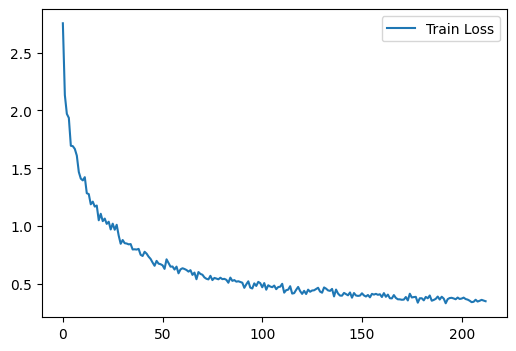

loss_plot = train(g, train_index, device , model , meta , 2000 , 1e-3 , 100)

sampler = NeighborSampler(

[15 for i in range(len(model.gnnlayers))], # fanout for each layer

prefetch_node_feats=['feat'],

prefetch_labels=['label'],

)

test_dataloader = DataLoader(

g,

torch.Tensor(test_index).to(torch.int64).to(device),

sampler,

device=device,

batch_size=1024,

shuffle=True,

drop_last=False,

num_workers=0,

use_uva=False,

)

test_output_metrics = evaluate(model , g, test_dataloader)

print(

"Fold : {:01d} | Test Accuracy = {:.4f} | F1 = {:.4f} ".format(

i+1 , test_output_metrics[1] , test_output_metrics[2] )

)

test_logits.extend(test_output_metrics[-2])

test_labels.extend(test_output_metrics[-1])

output_metrics.append(test_output_metrics)

if i == 0 :

best_model = model

best_idx = i

elif output_metrics[best_idx][1] < test_output_metrics[1] :

best_model = model

best_idx = i

get_gpu_memory()

del model

gc.collect()

torch.cuda.empty_cache()

print('Clearing gpu memory')

get_gpu_memory()

test_logits = torch.stack(test_logits)

test_labels = torch.stack(test_labels)

accuracy = []

F1 = []

i = 0

for metric in output_metrics :

accuracy.append(metric[1])

F1.append(metric[2])

print("%i Fold Cross Validation Accuracy = %2.2f \u00B1 %2.2f" %(5 , np.mean(accuracy)*100 , np.std(accuracy)*100))

print("%i Fold Cross Validation F1 = %2.2f \u00B1 %2.2f" %(5 , np.mean(F1)*100 , np.std(F1)*100))

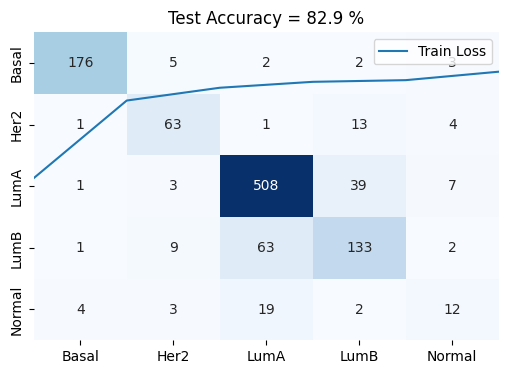

confusion_matrix(test_logits , test_labels , meta.astype('category').cat.categories)

plt.title('Test Accuracy = %2.1f %%' % (np.mean(accuracy)*100))

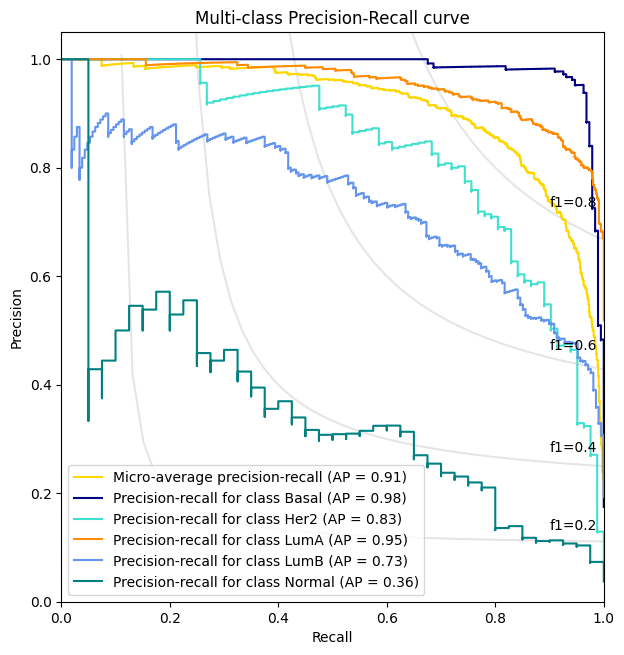

precision_recall_plot , all_predictions_conf = AUROC(test_logits, test_labels , meta)

node_predictions = []

node_true = []

display_label = meta.astype('category').cat.categories

for pred , true in zip(all_predictions_conf.argmax(1) , list(test_labels.detach().cpu().argmax(1).numpy())) :

node_predictions.append(display_label[pred])

node_true.append(display_label[true])

tst = pd.DataFrame({'Actual' : node_true , 'Predicted' : node_predictions})
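The cross-validation summary above reports each metric as mean ± standard deviation over the folds. Since `np.std` uses the population standard deviation by default (`ddof=0`), a dependency-free sketch of the same calculation can use `statistics.pstdev`. The helper `summarise_folds` and the values in `toy_accuracy` are hypothetical and are not results from this notebook.

```python
from statistics import fmean, pstdev


def summarise_folds(scores):
    """Return (mean, population std) of per-fold scores, both in percent."""
    # pstdev divides by n, matching the default behaviour of np.std (ddof=0)
    return fmean(scores) * 100, pstdev(scores) * 100


# Hypothetical per-fold accuracies, chosen only to make the arithmetic easy to check
toy_accuracy = [0.5, 0.75, 1.0]
mean_pct, std_pct = summarise_folds(toy_accuracy)
print("%i Fold Cross Validation Accuracy = %2.2f \u00B1 %2.2f"
      % (len(toy_accuracy), mean_pct, std_pct))
# 3 Fold Cross Validation Accuracy = 75.00 ± 20.41
```

Taking the fold count from `len(scores)` rather than hard-coding `5` keeps the printed label consistent if `n_splits` is changed.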

Using cuda device

Total = 42.4Gb Reserved = 0.8Gb Allocated = 0.3Gb

StratifiedKFold(n_splits=5, random_state=None, shuffle=True)

GCN_MME(

(encoder_dims): ModuleList(

(0): Encoder(

(encoder): ModuleList(

(0): Linear(in_features=464, out_features=500, bias=True)

(1): Linear(in_features=500, out_features=16, bias=True)

)

(norm): ModuleList(

(0): BatchNorm1d(500, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm1d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(decoder): Sequential(

(0): Linear(in_features=16, out_features=64, bias=True)

)

(drop): Dropout(p=0.5, inplace=False)

)

(1): Encoder(

(encoder): ModuleList(

(0): Linear(in_features=29995, out_features=500, bias=True)

(1): Linear(in_features=500, out_features=16, bias=True)

)

(norm): ModuleList(

(0): BatchNorm1d(500, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm1d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(decoder): Sequential(

(0): Linear(in_features=16, out_features=64, bias=True)

)

(drop): Dropout(p=0.5, inplace=False)

)

)

(gnnlayers): ModuleList(

(0): GraphConv(in=64, out=32, normalization=both, activation=None)

(1): GraphConv(in=32, out=5, normalization=both, activation=None)

)

(batch_norms): ModuleList(

(0): BatchNorm1d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(drop): Dropout(p=0.5, inplace=False)

)

Graph(num_nodes=1076, num_edges=1076,

ndata_schemes={'feat': Scheme(shape=(30459,), dtype=torch.float32), 'label': Scheme(shape=(5,), dtype=torch.int64)}

edata_schemes={})

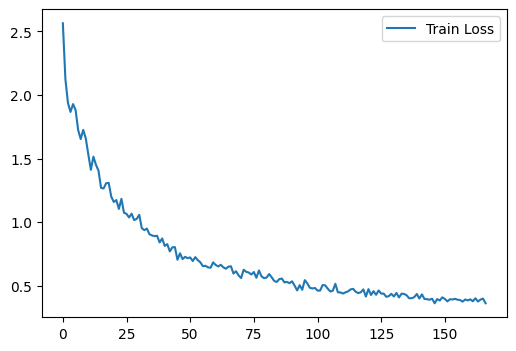

Epoch 00000 | Loss 2.7527 | Train Acc. 0.2128 |

Epoch 00005 | Loss 1.6914 | Train Acc. 0.4186 |

Epoch 00010 | Loss 1.3957 | Train Acc. 0.5012 |

Epoch 00015 | Loss 1.2123 | Train Acc. 0.5744 |

Epoch 00020 | Loss 1.0439 | Train Acc. 0.6267 |

Epoch 00025 | Loss 1.0219 | Train Acc. 0.6372 |

Epoch 00030 | Loss 0.8807 | Train Acc. 0.6895 |

Epoch 00035 | Loss 0.7992 | Train Acc. 0.7093 |

Epoch 00040 | Loss 0.7428 | Train Acc. 0.7384 |

Epoch 00045 | Loss 0.6863 | Train Acc. 0.7593 |

Epoch 00050 | Loss 0.6625 | Train Acc. 0.7767 |

Epoch 00055 | Loss 0.6522 | Train Acc. 0.7849 |

Epoch 00060 | Loss 0.6370 | Train Acc. 0.7884 |

Epoch 00065 | Loss 0.5796 | Train Acc. 0.8058 |

Epoch 00070 | Loss 0.5809 | Train Acc. 0.8047 |

Epoch 00075 | Loss 0.5348 | Train Acc. 0.8244 |

Epoch 00080 | Loss 0.5427 | Train Acc. 0.8174 |

Epoch 00085 | Loss 0.5280 | Train Acc. 0.8291 |

Epoch 00090 | Loss 0.5121 | Train Acc. 0.8209 |

Epoch 00095 | Loss 0.4624 | Train Acc. 0.8523 |

Epoch 00100 | Loss 0.4727 | Train Acc. 0.8535 |

Epoch 00105 | Loss 0.4734 | Train Acc. 0.8500 |

Epoch 00110 | Loss 0.5027 | Train Acc. 0.8186 |

Epoch 00115 | Loss 0.4183 | Train Acc. 0.8605 |

Epoch 00120 | Loss 0.4156 | Train Acc. 0.8779 |

Epoch 00125 | Loss 0.4449 | Train Acc. 0.8640 |

Epoch 00130 | Loss 0.4256 | Train Acc. 0.8570 |

Epoch 00135 | Loss 0.4586 | Train Acc. 0.8593 |

Epoch 00140 | Loss 0.4002 | Train Acc. 0.8767 |

Epoch 00145 | Loss 0.3828 | Train Acc. 0.8709 |

Epoch 00150 | Loss 0.4206 | Train Acc. 0.8616 |

Epoch 00155 | Loss 0.4157 | Train Acc. 0.8616 |

Epoch 00160 | Loss 0.3886 | Train Acc. 0.8733 |

Epoch 00165 | Loss 0.3768 | Train Acc. 0.8814 |

Epoch 00170 | Loss 0.3646 | Train Acc. 0.8791 |

Epoch 00175 | Loss 0.3845 | Train Acc. 0.8616 |

Epoch 00180 | Loss 0.3778 | Train Acc. 0.8837 |

Epoch 00185 | Loss 0.3566 | Train Acc. 0.8791 |

Epoch 00190 | Loss 0.3909 | Train Acc. 0.8733 |

Epoch 00195 | Loss 0.3816 | Train Acc. 0.8721 |

Epoch 00200 | Loss 0.3753 | Train Acc. 0.8791 |

Epoch 00205 | Loss 0.3441 | Train Acc. 0.8907 |

Epoch 00210 | Loss 0.3639 | Train Acc. 0.8907 |

Early stopping! No improvement for 20 consecutive epochs.

Pretraining | Loss = 0.4295 | Accuracy = 0.8472
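The "Early stopping!" message in the log above indicates that training halts once a monitored quantity has not improved for a set number of consecutive epochs (20 during pretraining). The stopping logic lives in `MAIN.train` and is not shown here, so the sketch below only illustrates the general patience rule. It assumes the monitored quantity is a loss that should decrease; `epochs_until_stop` and `toy_losses` are hypothetical names and values.

```python
def epochs_until_stop(losses, patience):
    """Return the epoch index at which a patience rule would stop, or None.

    Assumption: lower is better, and any strict improvement resets the counter.
    """
    best = float("inf")
    stale = 0  # consecutive epochs without improvement
    for epoch, loss in enumerate(losses):
        if loss < best:
            best, stale = loss, 0
        else:
            stale += 1
            if stale >= patience:
                return epoch
    return None


# Hypothetical loss curve: the best value is reached at epoch 2
toy_losses = [1.0, 0.8, 0.7, 0.75, 0.72, 0.71, 0.9]
print(epochs_until_stop(toy_losses, patience=3))  # 5
```

A larger patience, such as the 100 epochs passed to the second `train` call in the notebook, tolerates longer plateaus before stopping.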

Graph(num_nodes=1076, num_edges=18070,

ndata_schemes={'idx': Scheme(shape=(), dtype=torch.int64), 'label': Scheme(shape=(5,), dtype=torch.int64), 'feat': Scheme(shape=(30459,), dtype=torch.float32)}

edata_schemes={})

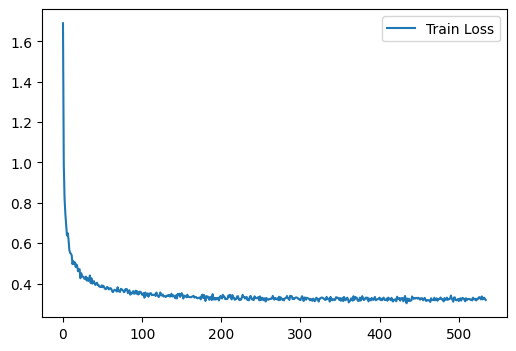

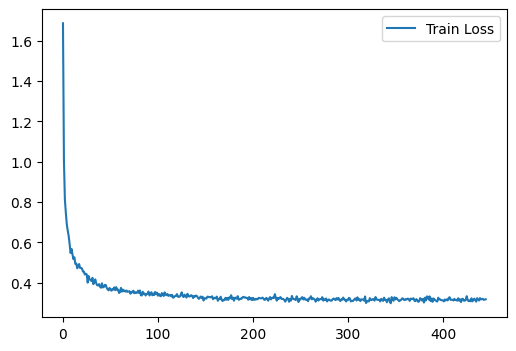

Epoch 00000 | Loss 1.6894 | Train Acc. 0.2360 |

Epoch 00005 | Loss 0.6380 | Train Acc. 0.8151 |

Epoch 00010 | Loss 0.5482 | Train Acc. 0.8360 |

Epoch 00015 | Loss 0.5048 | Train Acc. 0.8395 |

Epoch 00020 | Loss 0.4710 | Train Acc. 0.8477 |

Epoch 00025 | Loss 0.4344 | Train Acc. 0.8558 |

Epoch 00030 | Loss 0.4149 | Train Acc. 0.8686 |

Epoch 00035 | Loss 0.4022 | Train Acc. 0.8674 |

Epoch 00040 | Loss 0.3994 | Train Acc. 0.8698 |

Epoch 00045 | Loss 0.3920 | Train Acc. 0.8593 |

Epoch 00050 | Loss 0.3821 | Train Acc. 0.8686 |

Epoch 00055 | Loss 0.3768 | Train Acc. 0.8721 |

Epoch 00060 | Loss 0.3771 | Train Acc. 0.8686 |

Epoch 00065 | Loss 0.3691 | Train Acc. 0.8709 |

Epoch 00070 | Loss 0.3616 | Train Acc. 0.8721 |

Epoch 00075 | Loss 0.3690 | Train Acc. 0.8733 |

Epoch 00080 | Loss 0.3681 | Train Acc. 0.8698 |

Epoch 00085 | Loss 0.3448 | Train Acc. 0.8826 |

Epoch 00090 | Loss 0.3490 | Train Acc. 0.8849 |

Epoch 00095 | Loss 0.3456 | Train Acc. 0.8767 |

Epoch 00100 | Loss 0.3440 | Train Acc. 0.8826 |

Epoch 00105 | Loss 0.3454 | Train Acc. 0.8756 |

Epoch 00110 | Loss 0.3515 | Train Acc. 0.8744 |

Epoch 00115 | Loss 0.3427 | Train Acc. 0.8779 |

Epoch 00120 | Loss 0.3386 | Train Acc. 0.8779 |

Epoch 00125 | Loss 0.3411 | Train Acc. 0.8837 |

Epoch 00130 | Loss 0.3331 | Train Acc. 0.8791 |

Epoch 00135 | Loss 0.3353 | Train Acc. 0.8860 |

Epoch 00140 | Loss 0.3407 | Train Acc. 0.8826 |

Epoch 00145 | Loss 0.3484 | Train Acc. 0.8733 |

Epoch 00150 | Loss 0.3521 | Train Acc. 0.8744 |

Epoch 00155 | Loss 0.3353 | Train Acc. 0.8744 |

Epoch 00160 | Loss 0.3326 | Train Acc. 0.8744 |

Epoch 00165 | Loss 0.3394 | Train Acc. 0.8721 |

Epoch 00170 | Loss 0.3266 | Train Acc. 0.8884 |

Epoch 00175 | Loss 0.3384 | Train Acc. 0.8709 |

Epoch 00180 | Loss 0.3135 | Train Acc. 0.8849 |

Epoch 00185 | Loss 0.3191 | Train Acc. 0.8860 |

Epoch 00190 | Loss 0.3245 | Train Acc. 0.8860 |

Epoch 00195 | Loss 0.3227 | Train Acc. 0.8826 |

Epoch 00200 | Loss 0.3314 | Train Acc. 0.8721 |

Epoch 00205 | Loss 0.3226 | Train Acc. 0.8884 |

Epoch 00210 | Loss 0.3333 | Train Acc. 0.8744 |

Epoch 00215 | Loss 0.3276 | Train Acc. 0.8791 |

Epoch 00220 | Loss 0.3363 | Train Acc. 0.8767 |

Epoch 00225 | Loss 0.3203 | Train Acc. 0.8884 |

Epoch 00230 | Loss 0.3254 | Train Acc. 0.8779 |

Epoch 00235 | Loss 0.3166 | Train Acc. 0.8814 |

Epoch 00240 | Loss 0.3255 | Train Acc. 0.8837 |

Epoch 00245 | Loss 0.3269 | Train Acc. 0.8860 |

Epoch 00250 | Loss 0.3181 | Train Acc. 0.8826 |

Epoch 00255 | Loss 0.3266 | Train Acc. 0.8907 |

Epoch 00260 | Loss 0.3212 | Train Acc. 0.8826 |

Epoch 00265 | Loss 0.3388 | Train Acc. 0.8698 |

Epoch 00270 | Loss 0.3223 | Train Acc. 0.8837 |

Epoch 00275 | Loss 0.3317 | Train Acc. 0.8698 |

Epoch 00280 | Loss 0.3249 | Train Acc. 0.8733 |

Epoch 00285 | Loss 0.3279 | Train Acc. 0.8849 |

Epoch 00290 | Loss 0.3235 | Train Acc. 0.8721 |

Epoch 00295 | Loss 0.3309 | Train Acc. 0.8860 |

Epoch 00300 | Loss 0.3176 | Train Acc. 0.8849 |

Epoch 00305 | Loss 0.3306 | Train Acc. 0.8884 |

Epoch 00310 | Loss 0.3250 | Train Acc. 0.8849 |

Epoch 00315 | Loss 0.3141 | Train Acc. 0.8872 |

Epoch 00320 | Loss 0.3297 | Train Acc. 0.8872 |

Epoch 00325 | Loss 0.3255 | Train Acc. 0.8791 |

Epoch 00330 | Loss 0.3249 | Train Acc. 0.8837 |

Epoch 00335 | Loss 0.3236 | Train Acc. 0.8814 |

Epoch 00340 | Loss 0.3170 | Train Acc. 0.8919 |

Epoch 00345 | Loss 0.3324 | Train Acc. 0.8721 |

Epoch 00350 | Loss 0.3282 | Train Acc. 0.8860 |

Epoch 00355 | Loss 0.3338 | Train Acc. 0.8849 |

Epoch 00360 | Loss 0.3235 | Train Acc. 0.8849 |

Epoch 00365 | Loss 0.3211 | Train Acc. 0.8826 |

Epoch 00370 | Loss 0.3277 | Train Acc. 0.8860 |

Epoch 00375 | Loss 0.3303 | Train Acc. 0.8698 |

Epoch 00380 | Loss 0.3181 | Train Acc. 0.8767 |

Epoch 00385 | Loss 0.3299 | Train Acc. 0.8860 |

Epoch 00390 | Loss 0.3287 | Train Acc. 0.8767 |

Epoch 00395 | Loss 0.3175 | Train Acc. 0.8837 |

Epoch 00400 | Loss 0.3302 | Train Acc. 0.8814 |

Epoch 00405 | Loss 0.3302 | Train Acc. 0.8779 |

Epoch 00410 | Loss 0.3146 | Train Acc. 0.8895 |

Epoch 00415 | Loss 0.3291 | Train Acc. 0.8826 |

Epoch 00420 | Loss 0.3212 | Train Acc. 0.8814 |

Epoch 00425 | Loss 0.3283 | Train Acc. 0.8826 |

Epoch 00430 | Loss 0.3119 | Train Acc. 0.8965 |

Epoch 00435 | Loss 0.3257 | Train Acc. 0.8744 |

Epoch 00440 | Loss 0.3159 | Train Acc. 0.8814 |

Epoch 00445 | Loss 0.3257 | Train Acc. 0.8884 |

Epoch 00450 | Loss 0.3254 | Train Acc. 0.8733 |

Epoch 00455 | Loss 0.3168 | Train Acc. 0.8791 |

Epoch 00460 | Loss 0.3170 | Train Acc. 0.8779 |

Epoch 00465 | Loss 0.3271 | Train Acc. 0.8802 |

Epoch 00470 | Loss 0.3170 | Train Acc. 0.8919 |

Epoch 00475 | Loss 0.3220 | Train Acc. 0.8837 |

Epoch 00480 | Loss 0.3167 | Train Acc. 0.8849 |

Epoch 00485 | Loss 0.3302 | Train Acc. 0.8756 |

Epoch 00490 | Loss 0.3404 | Train Acc. 0.8663 |

Epoch 00495 | Loss 0.3313 | Train Acc. 0.8872 |

Epoch 00500 | Loss 0.3131 | Train Acc. 0.8860 |

Epoch 00505 | Loss 0.3289 | Train Acc. 0.8860 |

Epoch 00510 | Loss 0.3175 | Train Acc. 0.8942 |

Epoch 00515 | Loss 0.3190 | Train Acc. 0.8814 |

Epoch 00520 | Loss 0.3209 | Train Acc. 0.8884 |

Epoch 00525 | Loss 0.3297 | Train Acc. 0.8814 |

Epoch 00530 | Loss 0.3217 | Train Acc. 0.8837 |

Early stopping! No improvement for 100 consecutive epochs.

Fold : 1 | Test Accuracy = 0.8657 | F1 = 0.8570

Total = 42.4Gb Reserved = 0.9Gb Allocated = 0.3Gb

Clearing gpu memory

Total = 42.4Gb Reserved = 0.4Gb Allocated = 0.3Gb

GCN_MME(

(encoder_dims): ModuleList(

(0): Encoder(

(encoder): ModuleList(

(0): Linear(in_features=464, out_features=500, bias=True)

(1): Linear(in_features=500, out_features=16, bias=True)

)

(norm): ModuleList(

(0): BatchNorm1d(500, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm1d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(decoder): Sequential(

(0): Linear(in_features=16, out_features=64, bias=True)

)

(drop): Dropout(p=0.5, inplace=False)

)

(1): Encoder(

(encoder): ModuleList(

(0): Linear(in_features=29995, out_features=500, bias=True)

(1): Linear(in_features=500, out_features=16, bias=True)

)

(norm): ModuleList(

(0): BatchNorm1d(500, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm1d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(decoder): Sequential(

(0): Linear(in_features=16, out_features=64, bias=True)

)

(drop): Dropout(p=0.5, inplace=False)

)

)

(gnnlayers): ModuleList(

(0): GraphConv(in=64, out=32, normalization=both, activation=None)

(1): GraphConv(in=32, out=5, normalization=both, activation=None)

)

(batch_norms): ModuleList(

(0): BatchNorm1d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(drop): Dropout(p=0.5, inplace=False)

)

Graph(num_nodes=1076, num_edges=1076,

ndata_schemes={'feat': Scheme(shape=(30459,), dtype=torch.float32), 'label': Scheme(shape=(5,), dtype=torch.int64)}

edata_schemes={})

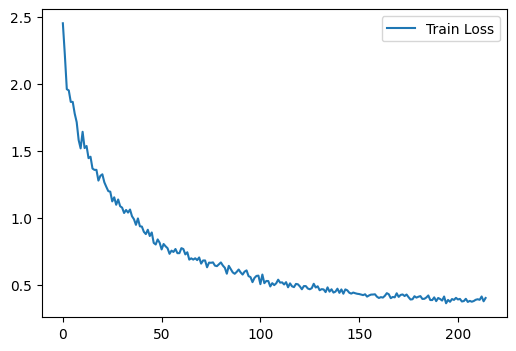

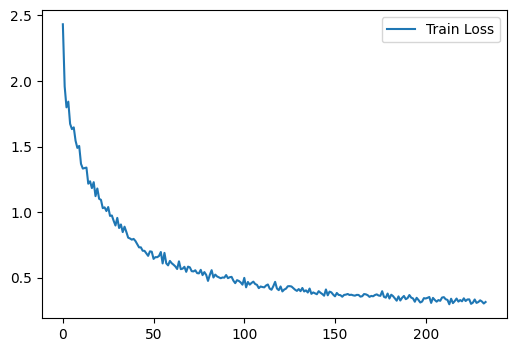

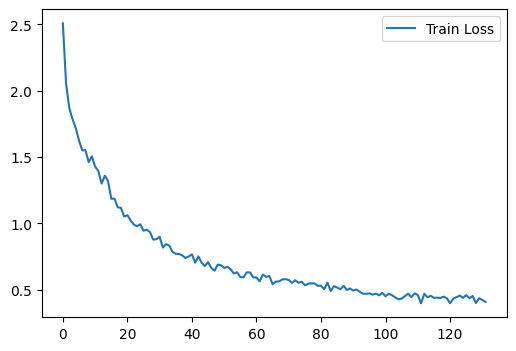

Epoch 00000 | Loss 2.4511 | Train Acc. 0.1882 |

Epoch 00005 | Loss 1.8642 | Train Acc. 0.3101 |

Epoch 00010 | Loss 1.6411 | Train Acc. 0.3972 |

Epoch 00015 | Loss 1.3667 | Train Acc. 0.4762 |

Epoch 00020 | Loss 1.3237 | Train Acc. 0.5110 |

Epoch 00025 | Loss 1.1216 | Train Acc. 0.5889 |

Epoch 00030 | Loss 1.0750 | Train Acc. 0.5854 |

Epoch 00035 | Loss 1.0108 | Train Acc. 0.6144 |

Epoch 00040 | Loss 0.9336 | Train Acc. 0.6632 |

Epoch 00045 | Loss 0.8896 | Train Acc. 0.6934 |

Epoch 00050 | Loss 0.7629 | Train Acc. 0.7410 |

Epoch 00055 | Loss 0.7540 | Train Acc. 0.7305 |

Epoch 00060 | Loss 0.7725 | Train Acc. 0.7445 |

Epoch 00065 | Loss 0.6965 | Train Acc. 0.7689 |

Epoch 00070 | Loss 0.6570 | Train Acc. 0.7724 |

Epoch 00075 | Loss 0.6633 | Train Acc. 0.7677 |

Epoch 00080 | Loss 0.6659 | Train Acc. 0.7689 |

Epoch 00085 | Loss 0.6167 | Train Acc. 0.8049 |

Epoch 00090 | Loss 0.5915 | Train Acc. 0.7979 |

Epoch 00095 | Loss 0.5562 | Train Acc. 0.8188 |

Epoch 00100 | Loss 0.5035 | Train Acc. 0.8409 |

Epoch 00105 | Loss 0.4866 | Train Acc. 0.8467 |

Epoch 00110 | Loss 0.5151 | Train Acc. 0.8200 |

Epoch 00115 | Loss 0.5096 | Train Acc. 0.8397 |

Epoch 00120 | Loss 0.4868 | Train Acc. 0.8467 |

Epoch 00125 | Loss 0.4656 | Train Acc. 0.8490 |

Epoch 00130 | Loss 0.4583 | Train Acc. 0.8560 |

Epoch 00135 | Loss 0.4480 | Train Acc. 0.8595 |

Epoch 00140 | Loss 0.4389 | Train Acc. 0.8734 |

Epoch 00145 | Loss 0.4397 | Train Acc. 0.8757 |

Epoch 00150 | Loss 0.4299 | Train Acc. 0.8734 |

Epoch 00155 | Loss 0.4195 | Train Acc. 0.8688 |

Epoch 00160 | Loss 0.4006 | Train Acc. 0.8780 |

Epoch 00165 | Loss 0.4282 | Train Acc. 0.8676 |

Epoch 00170 | Loss 0.4086 | Train Acc. 0.8711 |

Epoch 00175 | Loss 0.4066 | Train Acc. 0.8839 |

Epoch 00180 | Loss 0.4098 | Train Acc. 0.8757 |

Epoch 00185 | Loss 0.4192 | Train Acc. 0.8722 |

Epoch 00190 | Loss 0.4005 | Train Acc. 0.8873 |

Epoch 00195 | Loss 0.3862 | Train Acc. 0.8955 |

Epoch 00200 | Loss 0.3895 | Train Acc. 0.8966 |

Epoch 00205 | Loss 0.3710 | Train Acc. 0.8966 |

Epoch 00210 | Loss 0.3912 | Train Acc. 0.8827 |

Early stopping! No improvement for 20 consecutive epochs.

Pretraining | Loss = 0.5478 | Accuracy = 0.8419

Graph(num_nodes=1076, num_edges=18178,

ndata_schemes={'idx': Scheme(shape=(), dtype=torch.int64), 'label': Scheme(shape=(5,), dtype=torch.int64), 'feat': Scheme(shape=(30459,), dtype=torch.float32)}

edata_schemes={})

Epoch 00000 | Loss 2.0441 | Train Acc. 0.1150 |

Epoch 00005 | Loss 0.6583 | Train Acc. 0.8014 |

Epoch 00010 | Loss 0.5490 | Train Acc. 0.8304 |

Epoch 00015 | Loss 0.4682 | Train Acc. 0.8479 |

Epoch 00020 | Loss 0.4292 | Train Acc. 0.8641 |

Epoch 00025 | Loss 0.4143 | Train Acc. 0.8699 |

Epoch 00030 | Loss 0.3907 | Train Acc. 0.8722 |

Epoch 00035 | Loss 0.3699 | Train Acc. 0.8734 |

Epoch 00040 | Loss 0.3548 | Train Acc. 0.8827 |

Epoch 00045 | Loss 0.3561 | Train Acc. 0.8839 |

Epoch 00050 | Loss 0.3422 | Train Acc. 0.8792 |

Epoch 00055 | Loss 0.3370 | Train Acc. 0.8862 |

Epoch 00060 | Loss 0.3369 | Train Acc. 0.8815 |

Epoch 00065 | Loss 0.3422 | Train Acc. 0.8943 |

Epoch 00070 | Loss 0.3417 | Train Acc. 0.8804 |

Epoch 00075 | Loss 0.3471 | Train Acc. 0.8850 |

Epoch 00080 | Loss 0.3196 | Train Acc. 0.8920 |

Epoch 00085 | Loss 0.3276 | Train Acc. 0.8908 |

Epoch 00090 | Loss 0.3212 | Train Acc. 0.8897 |

Epoch 00095 | Loss 0.3252 | Train Acc. 0.8873 |

Epoch 00100 | Loss 0.3015 | Train Acc. 0.9001 |

Epoch 00105 | Loss 0.3198 | Train Acc. 0.8873 |

Epoch 00110 | Loss 0.3087 | Train Acc. 0.8908 |

Epoch 00115 | Loss 0.3224 | Train Acc. 0.8862 |

Epoch 00120 | Loss 0.3042 | Train Acc. 0.8931 |

Epoch 00125 | Loss 0.3087 | Train Acc. 0.9013 |

Epoch 00130 | Loss 0.3222 | Train Acc. 0.8780 |

Epoch 00135 | Loss 0.3074 | Train Acc. 0.8955 |

Epoch 00140 | Loss 0.3150 | Train Acc. 0.8804 |

Epoch 00145 | Loss 0.3091 | Train Acc. 0.8908 |

Epoch 00150 | Loss 0.3136 | Train Acc. 0.8827 |

Epoch 00155 | Loss 0.3034 | Train Acc. 0.8931 |

Epoch 00160 | Loss 0.3013 | Train Acc. 0.8978 |

Epoch 00165 | Loss 0.2994 | Train Acc. 0.8966 |

Epoch 00170 | Loss 0.3001 | Train Acc. 0.8920 |

Epoch 00175 | Loss 0.2957 | Train Acc. 0.9001 |

Epoch 00180 | Loss 0.2978 | Train Acc. 0.8966 |

Epoch 00185 | Loss 0.3043 | Train Acc. 0.8873 |

Epoch 00190 | Loss 0.2924 | Train Acc. 0.9059 |

Epoch 00195 | Loss 0.3139 | Train Acc. 0.8873 |

Epoch 00200 | Loss 0.3038 | Train Acc. 0.8908 |

Epoch 00205 | Loss 0.3112 | Train Acc. 0.8908 |

Epoch 00210 | Loss 0.3064 | Train Acc. 0.8931 |

Epoch 00215 | Loss 0.3010 | Train Acc. 0.8931 |

Epoch 00220 | Loss 0.3015 | Train Acc. 0.8931 |

Epoch 00225 | Loss 0.2924 | Train Acc. 0.9024 |

Epoch 00230 | Loss 0.2924 | Train Acc. 0.9001 |

Epoch 00235 | Loss 0.2940 | Train Acc. 0.8966 |

Epoch 00240 | Loss 0.2856 | Train Acc. 0.8990 |

Epoch 00245 | Loss 0.3025 | Train Acc. 0.8873 |

Epoch 00250 | Loss 0.3092 | Train Acc. 0.8931 |

Epoch 00255 | Loss 0.2917 | Train Acc. 0.8943 |

Epoch 00260 | Loss 0.3078 | Train Acc. 0.8920 |

Epoch 00265 | Loss 0.2996 | Train Acc. 0.8908 |

Epoch 00270 | Loss 0.2946 | Train Acc. 0.8955 |

Epoch 00275 | Loss 0.2947 | Train Acc. 0.8966 |

Epoch 00280 | Loss 0.3038 | Train Acc. 0.9013 |

Epoch 00285 | Loss 0.2916 | Train Acc. 0.8955 |

Epoch 00290 | Loss 0.2949 | Train Acc. 0.8873 |

Epoch 00295 | Loss 0.2931 | Train Acc. 0.8966 |

Epoch 00300 | Loss 0.2986 | Train Acc. 0.8920 |

Epoch 00305 | Loss 0.3033 | Train Acc. 0.8931 |

Epoch 00310 | Loss 0.2892 | Train Acc. 0.8955 |

Epoch 00315 | Loss 0.2980 | Train Acc. 0.8955 |

Epoch 00320 | Loss 0.2892 | Train Acc. 0.9001 |

Epoch 00325 | Loss 0.2884 | Train Acc. 0.8990 |

Epoch 00330 | Loss 0.2809 | Train Acc. 0.9013 |

Epoch 00335 | Loss 0.2923 | Train Acc. 0.8931 |

Epoch 00340 | Loss 0.2921 | Train Acc. 0.9001 |

Epoch 00345 | Loss 0.2989 | Train Acc. 0.8978 |

Epoch 00350 | Loss 0.2840 | Train Acc. 0.9048 |

Epoch 00355 | Loss 0.2983 | Train Acc. 0.8966 |

Epoch 00360 | Loss 0.2875 | Train Acc. 0.8990 |

Epoch 00365 | Loss 0.2844 | Train Acc. 0.8955 |

Epoch 00370 | Loss 0.2860 | Train Acc. 0.9036 |

Epoch 00375 | Loss 0.2943 | Train Acc. 0.8931 |

Epoch 00380 | Loss 0.2879 | Train Acc. 0.9001 |

Epoch 00385 | Loss 0.3094 | Train Acc. 0.8850 |

Epoch 00390 | Loss 0.2942 | Train Acc. 0.8966 |

Early stopping! No improvement for 100 consecutive epochs.

Fold : 2 | Test Accuracy = 0.8372 | F1 = 0.7946

Total = 42.4Gb Reserved = 0.8Gb Allocated = 0.4Gb

Clearing gpu memory

Total = 42.4Gb Reserved = 0.4Gb Allocated = 0.3Gb

GCN_MME(

(encoder_dims): ModuleList(

(0): Encoder(

(encoder): ModuleList(

(0): Linear(in_features=464, out_features=500, bias=True)

(1): Linear(in_features=500, out_features=16, bias=True)

)

(norm): ModuleList(

(0): BatchNorm1d(500, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm1d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(decoder): Sequential(

(0): Linear(in_features=16, out_features=64, bias=True)

)

(drop): Dropout(p=0.5, inplace=False)

)

(1): Encoder(

(encoder): ModuleList(

(0): Linear(in_features=29995, out_features=500, bias=True)

(1): Linear(in_features=500, out_features=16, bias=True)

)

(norm): ModuleList(

(0): BatchNorm1d(500, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm1d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(decoder): Sequential(

(0): Linear(in_features=16, out_features=64, bias=True)

)

(drop): Dropout(p=0.5, inplace=False)

)

)

(gnnlayers): ModuleList(

(0): GraphConv(in=64, out=32, normalization=both, activation=None)

(1): GraphConv(in=32, out=5, normalization=both, activation=None)

)

(batch_norms): ModuleList(

(0): BatchNorm1d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(drop): Dropout(p=0.5, inplace=False)

)

Graph(num_nodes=1076, num_edges=1076,

ndata_schemes={'feat': Scheme(shape=(30459,), dtype=torch.float32), 'label': Scheme(shape=(5,), dtype=torch.int64)}

edata_schemes={})

Epoch 00000 | Loss 2.5630 | Train Acc. 0.2230 |

Epoch 00005 | Loss 1.8808 | Train Acc. 0.3624 |

Epoch 00010 | Loss 1.5316 | Train Acc. 0.4460 |

Epoch 00015 | Loss 1.2690 | Train Acc. 0.5366 |

Epoch 00020 | Loss 1.1587 | Train Acc. 0.5900 |

Epoch 00025 | Loss 1.0651 | Train Acc. 0.6330 |

Epoch 00030 | Loss 1.0574 | Train Acc. 0.6307 |

Epoch 00035 | Loss 0.8955 | Train Acc. 0.7015 |

Epoch 00040 | Loss 0.8131 | Train Acc. 0.7189 |

Epoch 00045 | Loss 0.7046 | Train Acc. 0.7642 |

Epoch 00050 | Loss 0.7225 | Train Acc. 0.7573 |

Epoch 00055 | Loss 0.6538 | Train Acc. 0.7863 |

Epoch 00060 | Loss 0.6638 | Train Acc. 0.7770 |

Epoch 00065 | Loss 0.6497 | Train Acc. 0.7886 |

Epoch 00070 | Loss 0.5605 | Train Acc. 0.8153 |

Epoch 00075 | Loss 0.6079 | Train Acc. 0.7944 |

Epoch 00080 | Loss 0.5644 | Train Acc. 0.8211 |

Epoch 00085 | Loss 0.5524 | Train Acc. 0.8211 |

Epoch 00090 | Loss 0.5348 | Train Acc. 0.8304 |

Epoch 00095 | Loss 0.5440 | Train Acc. 0.8304 |

Epoch 00100 | Loss 0.4620 | Train Acc. 0.8513 |

Epoch 00105 | Loss 0.4549 | Train Acc. 0.8606 |

Epoch 00110 | Loss 0.4388 | Train Acc. 0.8630 |

Epoch 00115 | Loss 0.4529 | Train Acc. 0.8560 |

Epoch 00120 | Loss 0.4734 | Train Acc. 0.8374 |

Epoch 00125 | Loss 0.4383 | Train Acc. 0.8560 |

Epoch 00130 | Loss 0.4155 | Train Acc. 0.8606 |

Epoch 00135 | Loss 0.4248 | Train Acc. 0.8664 |

Epoch 00140 | Loss 0.3996 | Train Acc. 0.8676 |

Epoch 00145 | Loss 0.3981 | Train Acc. 0.8792 |

Epoch 00150 | Loss 0.3966 | Train Acc. 0.8676 |

Epoch 00155 | Loss 0.3902 | Train Acc. 0.8897 |

Epoch 00160 | Loss 0.3934 | Train Acc. 0.8792 |

Epoch 00165 | Loss 0.3986 | Train Acc. 0.8815 |

Early stopping! No improvement for 20 consecutive epochs.

Pretraining | Loss = 0.6176 | Accuracy = 0.8047

Graph(num_nodes=1076, num_edges=18230,

ndata_schemes={'idx': Scheme(shape=(), dtype=torch.int64), 'label': Scheme(shape=(5,), dtype=torch.int64), 'feat': Scheme(shape=(30459,), dtype=torch.float32)}

edata_schemes={})

Epoch 00000 | Loss 1.6864 | Train Acc. 0.2741 |

Epoch 00005 | Loss 0.6581 | Train Acc. 0.7921 |

Epoch 00010 | Loss 0.5399 | Train Acc. 0.8455 |

Epoch 00015 | Loss 0.4712 | Train Acc. 0.8571 |

Epoch 00020 | Loss 0.4702 | Train Acc. 0.8502 |

Epoch 00025 | Loss 0.4411 | Train Acc. 0.8455 |

Epoch 00030 | Loss 0.4082 | Train Acc. 0.8630 |

Epoch 00035 | Loss 0.3969 | Train Acc. 0.8653 |

Epoch 00040 | Loss 0.3749 | Train Acc. 0.8688 |

Epoch 00045 | Loss 0.3886 | Train Acc. 0.8525 |

Epoch 00050 | Loss 0.3660 | Train Acc. 0.8711 |

Epoch 00055 | Loss 0.3625 | Train Acc. 0.8653 |

Epoch 00060 | Loss 0.3531 | Train Acc. 0.8757 |

Epoch 00065 | Loss 0.3609 | Train Acc. 0.8734 |

Epoch 00070 | Loss 0.3577 | Train Acc. 0.8804 |

Epoch 00075 | Loss 0.3474 | Train Acc. 0.8804 |

Epoch 00080 | Loss 0.3488 | Train Acc. 0.8804 |

Epoch 00085 | Loss 0.3427 | Train Acc. 0.8746 |

Epoch 00090 | Loss 0.3558 | Train Acc. 0.8722 |

Epoch 00095 | Loss 0.3458 | Train Acc. 0.8769 |

Epoch 00100 | Loss 0.3353 | Train Acc. 0.8722 |

Epoch 00105 | Loss 0.3427 | Train Acc. 0.8699 |

Epoch 00110 | Loss 0.3438 | Train Acc. 0.8769 |

Epoch 00115 | Loss 0.3389 | Train Acc. 0.8699 |

Epoch 00120 | Loss 0.3431 | Train Acc. 0.8780 |

Epoch 00125 | Loss 0.3519 | Train Acc. 0.8711 |

Epoch 00130 | Loss 0.3258 | Train Acc. 0.8850 |

Epoch 00135 | Loss 0.3377 | Train Acc. 0.8780 |

Epoch 00140 | Loss 0.3361 | Train Acc. 0.8711 |

Epoch 00145 | Loss 0.3312 | Train Acc. 0.8780 |

Epoch 00150 | Loss 0.3216 | Train Acc. 0.8839 |

Epoch 00155 | Loss 0.3281 | Train Acc. 0.8792 |

Epoch 00160 | Loss 0.3251 | Train Acc. 0.8815 |

Epoch 00165 | Loss 0.3186 | Train Acc. 0.8908 |

Epoch 00170 | Loss 0.3133 | Train Acc. 0.8943 |

Epoch 00175 | Loss 0.3264 | Train Acc. 0.8839 |

Epoch 00180 | Loss 0.3103 | Train Acc. 0.8885 |

Epoch 00185 | Loss 0.3149 | Train Acc. 0.8943 |

Epoch 00190 | Loss 0.3294 | Train Acc. 0.8711 |

Epoch 00195 | Loss 0.3153 | Train Acc. 0.8827 |

Epoch 00200 | Loss 0.3246 | Train Acc. 0.8769 |

Epoch 00205 | Loss 0.3176 | Train Acc. 0.8850 |

Epoch 00210 | Loss 0.3259 | Train Acc. 0.8769 |

Epoch 00215 | Loss 0.3205 | Train Acc. 0.8862 |

Epoch 00220 | Loss 0.3239 | Train Acc. 0.8711 |

Epoch 00225 | Loss 0.3109 | Train Acc. 0.8931 |

Epoch 00230 | Loss 0.3190 | Train Acc. 0.8862 |

Epoch 00235 | Loss 0.3157 | Train Acc. 0.8862 |

Epoch 00240 | Loss 0.3109 | Train Acc. 0.8792 |

Epoch 00245 | Loss 0.3135 | Train Acc. 0.8804 |

Epoch 00250 | Loss 0.3161 | Train Acc. 0.8792 |

Epoch 00255 | Loss 0.3162 | Train Acc. 0.8885 |

Epoch 00260 | Loss 0.3211 | Train Acc. 0.8885 |

Epoch 00265 | Loss 0.3167 | Train Acc. 0.8804 |

Epoch 00270 | Loss 0.3175 | Train Acc. 0.8920 |

Epoch 00275 | Loss 0.3173 | Train Acc. 0.8839 |

Epoch 00280 | Loss 0.3167 | Train Acc. 0.8839 |

Epoch 00285 | Loss 0.3212 | Train Acc. 0.8780 |

Epoch 00290 | Loss 0.3240 | Train Acc. 0.8827 |

Epoch 00295 | Loss 0.3260 | Train Acc. 0.8734 |

Epoch 00300 | Loss 0.3132 | Train Acc. 0.8815 |

Epoch 00305 | Loss 0.3084 | Train Acc. 0.8839 |

Epoch 00310 | Loss 0.3271 | Train Acc. 0.8734 |

Epoch 00315 | Loss 0.3165 | Train Acc. 0.8839 |

Epoch 00320 | Loss 0.3061 | Train Acc. 0.8885 |

Epoch 00325 | Loss 0.3137 | Train Acc. 0.8862 |

Epoch 00330 | Loss 0.3171 | Train Acc. 0.8873 |

Epoch 00335 | Loss 0.3195 | Train Acc. 0.8757 |

Epoch 00340 | Loss 0.3146 | Train Acc. 0.8827 |

Epoch 00345 | Loss 0.2976 | Train Acc. 0.8839 |

Epoch 00350 | Loss 0.3170 | Train Acc. 0.8792 |

Epoch 00355 | Loss 0.3127 | Train Acc. 0.8839 |

Epoch 00360 | Loss 0.3137 | Train Acc. 0.8908 |

Epoch 00365 | Loss 0.3096 | Train Acc. 0.8815 |

Epoch 00370 | Loss 0.3100 | Train Acc. 0.8792 |

Epoch 00375 | Loss 0.3078 | Train Acc. 0.8804 |

Epoch 00380 | Loss 0.3011 | Train Acc. 0.8839 |

Epoch 00385 | Loss 0.3158 | Train Acc. 0.8873 |

Epoch 00390 | Loss 0.3215 | Train Acc. 0.8804 |

Epoch 00395 | Loss 0.3252 | Train Acc. 0.8804 |

Epoch 00400 | Loss 0.3113 | Train Acc. 0.8873 |

Epoch 00405 | Loss 0.3120 | Train Acc. 0.8815 |

Epoch 00410 | Loss 0.3148 | Train Acc. 0.8815 |

Epoch 00415 | Loss 0.3104 | Train Acc. 0.8815 |

Epoch 00420 | Loss 0.3188 | Train Acc. 0.8885 |

Epoch 00425 | Loss 0.3327 | Train Acc. 0.8722 |

Epoch 00430 | Loss 0.3221 | Train Acc. 0.8873 |

Epoch 00435 | Loss 0.3057 | Train Acc. 0.8908 |

Epoch 00440 | Loss 0.3168 | Train Acc. 0.8862 |

Epoch 00445 | Loss 0.3176 | Train Acc. 0.8897 |

Early stopping! No improvement for 100 consecutive epochs.

Fold : 3 | Test Accuracy = 0.8140 | F1 = 0.7819

Total = 42.4Gb Reserved = 0.8Gb Allocated = 0.4Gb

Clearing gpu memory

Total = 42.4Gb Reserved = 0.4Gb Allocated = 0.3Gb

GCN_MME(

(encoder_dims): ModuleList(

(0): Encoder(

(encoder): ModuleList(

(0): Linear(in_features=464, out_features=500, bias=True)

(1): Linear(in_features=500, out_features=16, bias=True)

)

(norm): ModuleList(

(0): BatchNorm1d(500, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm1d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(decoder): Sequential(

(0): Linear(in_features=16, out_features=64, bias=True)

)

(drop): Dropout(p=0.5, inplace=False)

)

(1): Encoder(

(encoder): ModuleList(

(0): Linear(in_features=29995, out_features=500, bias=True)

(1): Linear(in_features=500, out_features=16, bias=True)

)

(norm): ModuleList(

(0): BatchNorm1d(500, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm1d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(decoder): Sequential(

(0): Linear(in_features=16, out_features=64, bias=True)

)

(drop): Dropout(p=0.5, inplace=False)

)

)

(gnnlayers): ModuleList(

(0): GraphConv(in=64, out=32, normalization=both, activation=None)

(1): GraphConv(in=32, out=5, normalization=both, activation=None)

)

(batch_norms): ModuleList(

(0): BatchNorm1d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(drop): Dropout(p=0.5, inplace=False)

)

Graph(num_nodes=1076, num_edges=1076,

ndata_schemes={'feat': Scheme(shape=(30459,), dtype=torch.float32), 'label': Scheme(shape=(5,), dtype=torch.int64)}

edata_schemes={})

Epoch 00000 | Loss 2.4323 | Train Acc. 0.2253 |

Epoch 00005 | Loss 1.6332 | Train Acc. 0.3740 |

Epoch 00010 | Loss 1.3681 | Train Acc. 0.4762 |

Epoch 00015 | Loss 1.2353 | Train Acc. 0.5122 |

Epoch 00020 | Loss 1.1013 | Train Acc. 0.5947 |

Epoch 00025 | Loss 1.0389 | Train Acc. 0.6086 |

Epoch 00030 | Loss 0.9564 | Train Acc. 0.6725 |

Epoch 00035 | Loss 0.8490 | Train Acc. 0.6887 |

Epoch 00040 | Loss 0.7801 | Train Acc. 0.7480 |

Epoch 00045 | Loss 0.7051 | Train Acc. 0.7549 |

Epoch 00050 | Loss 0.6436 | Train Acc. 0.7944 |

Epoch 00055 | Loss 0.6092 | Train Acc. 0.7956 |

Epoch 00060 | Loss 0.6106 | Train Acc. 0.7956 |

Epoch 00065 | Loss 0.5653 | Train Acc. 0.8002 |

Epoch 00070 | Loss 0.5792 | Train Acc. 0.8084 |

Epoch 00075 | Loss 0.5333 | Train Acc. 0.8281 |

Epoch 00080 | Loss 0.4759 | Train Acc. 0.8444 |

Epoch 00085 | Loss 0.5099 | Train Acc. 0.8269 |

Epoch 00090 | Loss 0.5205 | Train Acc. 0.8351 |

Epoch 00095 | Loss 0.4588 | Train Acc. 0.8583 |

Epoch 00100 | Loss 0.4990 | Train Acc. 0.8223 |

Epoch 00105 | Loss 0.4702 | Train Acc. 0.8397 |

Epoch 00110 | Loss 0.4292 | Train Acc. 0.8734 |

Epoch 00115 | Loss 0.4084 | Train Acc. 0.8630 |

Epoch 00120 | Loss 0.4335 | Train Acc. 0.8571 |

Epoch 00125 | Loss 0.4358 | Train Acc. 0.8595 |

Epoch 00130 | Loss 0.4137 | Train Acc. 0.8757 |

Epoch 00135 | Loss 0.3874 | Train Acc. 0.8664 |

Epoch 00140 | Loss 0.3741 | Train Acc. 0.8839 |

Epoch 00145 | Loss 0.4107 | Train Acc. 0.8618 |

Epoch 00150 | Loss 0.3582 | Train Acc. 0.8897 |

Epoch 00155 | Loss 0.3689 | Train Acc. 0.8827 |

Epoch 00160 | Loss 0.3658 | Train Acc. 0.8815 |

Epoch 00165 | Loss 0.3591 | Train Acc. 0.8804 |

Epoch 00170 | Loss 0.3615 | Train Acc. 0.8815 |

Epoch 00175 | Loss 0.3620 | Train Acc. 0.8943 |

Epoch 00180 | Loss 0.3415 | Train Acc. 0.8804 |

Epoch 00185 | Loss 0.3578 | Train Acc. 0.8885 |

Epoch 00190 | Loss 0.3446 | Train Acc. 0.8885 |

Epoch 00195 | Loss 0.3469 | Train Acc. 0.8943 |

Epoch 00200 | Loss 0.3418 | Train Acc. 0.8978 |

Epoch 00205 | Loss 0.3305 | Train Acc. 0.8862 |

Epoch 00210 | Loss 0.3519 | Train Acc. 0.8943 |

Epoch 00215 | Loss 0.3065 | Train Acc. 0.9106 |

Epoch 00220 | Loss 0.3202 | Train Acc. 0.8990 |

Epoch 00225 | Loss 0.3007 | Train Acc. 0.9106 |

Epoch 00230 | Loss 0.3278 | Train Acc. 0.8955 |

Early stopping! No improvement for 20 consecutive epochs.

Pretraining | Loss = 0.5246 | Accuracy = 0.8465

Graph(num_nodes=1076, num_edges=18106,

ndata_schemes={'idx': Scheme(shape=(), dtype=torch.int64), 'label': Scheme(shape=(5,), dtype=torch.int64), 'feat': Scheme(shape=(30459,), dtype=torch.float32)}

edata_schemes={})

Epoch 00000 | Loss 2.0896 | Train Acc. 0.1638 |

Epoch 00005 | Loss 0.7488 | Train Acc. 0.7735 |

Epoch 00010 | Loss 0.6079 | Train Acc. 0.8177 |

Epoch 00015 | Loss 0.5355 | Train Acc. 0.8351 |

Epoch 00020 | Loss 0.4685 | Train Acc. 0.8525 |

Epoch 00025 | Loss 0.4448 | Train Acc. 0.8653 |

Epoch 00030 | Loss 0.4276 | Train Acc. 0.8502 |

Epoch 00035 | Loss 0.4055 | Train Acc. 0.8571 |

Epoch 00040 | Loss 0.3865 | Train Acc. 0.8711 |

Epoch 00045 | Loss 0.3677 | Train Acc. 0.8780 |

Epoch 00050 | Loss 0.3576 | Train Acc. 0.8780 |

Epoch 00055 | Loss 0.3685 | Train Acc. 0.8699 |

Epoch 00060 | Loss 0.3516 | Train Acc. 0.8688 |

Epoch 00065 | Loss 0.3483 | Train Acc. 0.8827 |

Epoch 00070 | Loss 0.3499 | Train Acc. 0.8746 |

Epoch 00075 | Loss 0.3465 | Train Acc. 0.8664 |

Epoch 00080 | Loss 0.3660 | Train Acc. 0.8780 |

Epoch 00085 | Loss 0.3421 | Train Acc. 0.8873 |

Epoch 00090 | Loss 0.3415 | Train Acc. 0.8885 |

Epoch 00095 | Loss 0.3289 | Train Acc. 0.8862 |

Epoch 00100 | Loss 0.3357 | Train Acc. 0.8839 |

Epoch 00105 | Loss 0.3475 | Train Acc. 0.8688 |

Epoch 00110 | Loss 0.3331 | Train Acc. 0.8804 |

Epoch 00115 | Loss 0.3329 | Train Acc. 0.8769 |

Epoch 00120 | Loss 0.3337 | Train Acc. 0.8722 |

Epoch 00125 | Loss 0.3495 | Train Acc. 0.8792 |

Epoch 00130 | Loss 0.3340 | Train Acc. 0.8815 |

Epoch 00135 | Loss 0.3253 | Train Acc. 0.8839 |

Epoch 00140 | Loss 0.3270 | Train Acc. 0.8757 |

Epoch 00145 | Loss 0.3186 | Train Acc. 0.8908 |

Epoch 00150 | Loss 0.3260 | Train Acc. 0.8850 |

Epoch 00155 | Loss 0.3297 | Train Acc. 0.8815 |

Epoch 00160 | Loss 0.3337 | Train Acc. 0.8827 |

Epoch 00165 | Loss 0.3177 | Train Acc. 0.8873 |

Epoch 00170 | Loss 0.3279 | Train Acc. 0.8676 |

Epoch 00175 | Loss 0.3294 | Train Acc. 0.8862 |

Epoch 00180 | Loss 0.3241 | Train Acc. 0.8839 |

Epoch 00185 | Loss 0.3197 | Train Acc. 0.8780 |

Epoch 00190 | Loss 0.3149 | Train Acc. 0.8792 |

Epoch 00195 | Loss 0.3210 | Train Acc. 0.8862 |

Epoch 00200 | Loss 0.3195 | Train Acc. 0.8827 |

Epoch 00205 | Loss 0.3319 | Train Acc. 0.8699 |

Epoch 00210 | Loss 0.3154 | Train Acc. 0.8862 |

Epoch 00215 | Loss 0.3103 | Train Acc. 0.8862 |

Epoch 00220 | Loss 0.3072 | Train Acc. 0.8885 |

Epoch 00225 | Loss 0.3322 | Train Acc. 0.8769 |

Epoch 00230 | Loss 0.3157 | Train Acc. 0.8839 |

Epoch 00235 | Loss 0.3139 | Train Acc. 0.8792 |

Epoch 00240 | Loss 0.3191 | Train Acc. 0.8850 |

Epoch 00245 | Loss 0.3115 | Train Acc. 0.8804 |

Epoch 00250 | Loss 0.3218 | Train Acc. 0.8757 |

Epoch 00255 | Loss 0.3172 | Train Acc. 0.8827 |

Epoch 00260 | Loss 0.3102 | Train Acc. 0.8757 |

Epoch 00265 | Loss 0.3145 | Train Acc. 0.8815 |

Epoch 00270 | Loss 0.3251 | Train Acc. 0.8897 |

Epoch 00275 | Loss 0.3105 | Train Acc. 0.8897 |

Epoch 00280 | Loss 0.3158 | Train Acc. 0.8862 |

Epoch 00285 | Loss 0.3258 | Train Acc. 0.8827 |

Epoch 00290 | Loss 0.3235 | Train Acc. 0.8873 |

Epoch 00295 | Loss 0.3188 | Train Acc. 0.8850 |

Epoch 00300 | Loss 0.3155 | Train Acc. 0.8815 |

Epoch 00305 | Loss 0.3126 | Train Acc. 0.8908 |

Epoch 00310 | Loss 0.3019 | Train Acc. 0.8873 |

Epoch 00315 | Loss 0.3163 | Train Acc. 0.8885 |

Epoch 00320 | Loss 0.3173 | Train Acc. 0.8850 |

Epoch 00325 | Loss 0.3246 | Train Acc. 0.8873 |

Epoch 00330 | Loss 0.3157 | Train Acc. 0.8850 |

Epoch 00335 | Loss 0.3163 | Train Acc. 0.8827 |

Epoch 00340 | Loss 0.3094 | Train Acc. 0.8908 |

Epoch 00345 | Loss 0.3105 | Train Acc. 0.8850 |

Epoch 00350 | Loss 0.3229 | Train Acc. 0.8873 |

Early stopping! No improvement for 100 consecutive epochs.

Fold : 4 | Test Accuracy = 0.8000 | F1 = 0.7615

Total = 42.4Gb Reserved = 0.8Gb Allocated = 0.4Gb

Clearing gpu memory

Total = 42.4Gb Reserved = 0.4Gb Allocated = 0.3Gb
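In each fold the pretraining graph is printed with `num_edges=1076`, equal to the number of nodes, while the graph used for the main run has about 18,000 edges, consistent with a patient similarity network built from distances in the embedding space. One common way to build such a network is a k-nearest-neighbour graph. The sketch below illustrates that idea only: the function name `knn_edges`, the Euclidean metric, the value of `k`, and the symmetrisation step are all assumptions, and the actual MOGDx construction may differ.

```python
import numpy as np


def knn_edges(embeddings, k=15):
    """Return sorted undirected edges (i, j), i < j, linking each row to its k nearest rows.

    Hypothetical helper: Euclidean distance and k are illustrative choices.
    """
    x = np.asarray(embeddings, dtype=float)
    # Pairwise squared Euclidean distances, shape (n, n).
    diff = x[:, None, :] - x[None, :, :]
    dist = (diff ** 2).sum(axis=-1)
    np.fill_diagonal(dist, np.inf)  # a patient is never its own neighbour
    # Indices of the k closest patients for every row.
    nbrs = np.argsort(dist, axis=1)[:, :k]
    edges = set()
    for i, row in enumerate(nbrs):
        for j in row:
            j = int(j)
            edges.add((min(i, j), max(i, j)))  # store each undirected edge once
    return sorted(edges)


# Toy usage: 20 random "patients" with 4-dimensional embeddings.
rng = np.random.default_rng(0)
emb = rng.normal(size=(20, 4))
edges = knn_edges(emb, k=3)
print(len(edges), "undirected edges")
```

The edge list can then be split into source and destination index arrays and passed to a graph library; with DGL, for example, `dgl.graph((src, dst))` accepts such a pair.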

GCN_MME(

(encoder_dims): ModuleList(

(0): Encoder(

(encoder): ModuleList(

(0): Linear(in_features=464, out_features=500, bias=True)

(1): Linear(in_features=500, out_features=16, bias=True)

)

(norm): ModuleList(

(0): BatchNorm1d(500, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm1d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(decoder): Sequential(

(0): Linear(in_features=16, out_features=64, bias=True)

)

(drop): Dropout(p=0.5, inplace=False)

)

(1): Encoder(

(encoder): ModuleList(

(0): Linear(in_features=29995, out_features=500, bias=True)

(1): Linear(in_features=500, out_features=16, bias=True)

)

(norm): ModuleList(

(0): BatchNorm1d(500, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm1d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(decoder): Sequential(

(0): Linear(in_features=16, out_features=64, bias=True)

)

(drop): Dropout(p=0.5, inplace=False)

)

)

(gnnlayers): ModuleList(

(0): GraphConv(in=64, out=32, normalization=both, activation=None)

(1): GraphConv(in=32, out=5, normalization=both, activation=None)

)

(batch_norms): ModuleList(

(0): BatchNorm1d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(drop): Dropout(p=0.5, inplace=False)

)

Graph(num_nodes=1076, num_edges=1076,

ndata_schemes={'feat': Scheme(shape=(30459,), dtype=torch.float32), 'label': Scheme(shape=(5,), dtype=torch.int64)}

edata_schemes={})

Epoch 00000 | Loss 2.5078 | Train Acc. 0.2253 |

Epoch 00005 | Loss 1.6258 | Train Acc. 0.4077 |

Epoch 00010 | Loss 1.4283 | Train Acc. 0.4646 |

Epoch 00015 | Loss 1.1862 | Train Acc. 0.5738 |

Epoch 00020 | Loss 1.0619 | Train Acc. 0.6179 |

Epoch 00025 | Loss 0.9455 | Train Acc. 0.6481 |

Epoch 00030 | Loss 0.8999 | Train Acc. 0.6957 |

Epoch 00035 | Loss 0.7717 | Train Acc. 0.7515 |

Epoch 00040 | Loss 0.7674 | Train Acc. 0.7549 |

Epoch 00045 | Loss 0.7086 | Train Acc. 0.7573 |

Epoch 00050 | Loss 0.6650 | Train Acc. 0.7851 |

Epoch 00055 | Loss 0.5958 | Train Acc. 0.8211 |

Epoch 00060 | Loss 0.5926 | Train Acc. 0.8049 |

Epoch 00065 | Loss 0.5426 | Train Acc. 0.8211 |

Epoch 00070 | Loss 0.5750 | Train Acc. 0.8258 |

Epoch 00075 | Loss 0.5345 | Train Acc. 0.8328 |

Epoch 00080 | Loss 0.5310 | Train Acc. 0.8328 |

Epoch 00085 | Loss 0.5175 | Train Acc. 0.8409 |

Epoch 00090 | Loss 0.4949 | Train Acc. 0.8525 |

Epoch 00095 | Loss 0.4740 | Train Acc. 0.8432 |

Epoch 00100 | Loss 0.4516 | Train Acc. 0.8548 |

Epoch 00105 | Loss 0.4347 | Train Acc. 0.8479 |

Epoch 00110 | Loss 0.4613 | Train Acc. 0.8525 |

Epoch 00115 | Loss 0.4397 | Train Acc. 0.8606 |

Epoch 00120 | Loss 0.3992 | Train Acc. 0.8746 |

Epoch 00125 | Loss 0.4605 | Train Acc. 0.8502 |

Epoch 00130 | Loss 0.4237 | Train Acc. 0.8664 |

Early stopping! No improvement for 20 consecutive epochs.

Pretraining | Loss = 0.6088 | Accuracy = 0.7814

Graph(num_nodes=1076, num_edges=18188,

ndata_schemes={'idx': Scheme(shape=(), dtype=torch.int64), 'label': Scheme(shape=(5,), dtype=torch.int64), 'feat': Scheme(shape=(30459,), dtype=torch.float32)}

edata_schemes={})

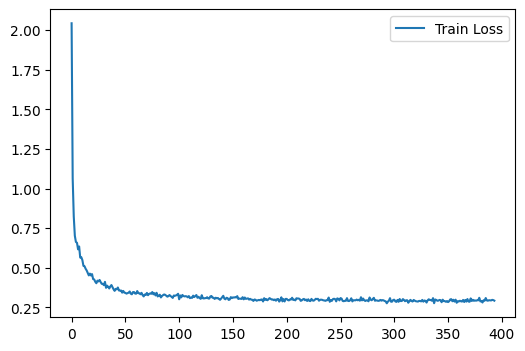

Epoch 00000 | Loss 2.3680 | Train Acc. 0.0418 |

Epoch 00005 | Loss 0.6399 | Train Acc. 0.8118 |

Epoch 00010 | Loss 0.5215 | Train Acc. 0.8328 |

Epoch 00015 | Loss 0.4666 | Train Acc. 0.8571 |

Epoch 00020 | Loss 0.4282 | Train Acc. 0.8688 |

Epoch 00025 | Loss 0.4414 | Train Acc. 0.8560 |

Epoch 00030 | Loss 0.4203 | Train Acc. 0.8630 |

Epoch 00035 | Loss 0.3844 | Train Acc. 0.8757 |

Epoch 00040 | Loss 0.3720 | Train Acc. 0.8711 |

Epoch 00045 | Loss 0.3658 | Train Acc. 0.8699 |

Epoch 00050 | Loss 0.3577 | Train Acc. 0.8792 |

Epoch 00055 | Loss 0.3498 | Train Acc. 0.8920 |

Epoch 00060 | Loss 0.3519 | Train Acc. 0.8699 |

Epoch 00065 | Loss 0.3438 | Train Acc. 0.8827 |

Epoch 00070 | Loss 0.3490 | Train Acc. 0.8780 |

Epoch 00075 | Loss 0.3486 | Train Acc. 0.8815 |

Epoch 00080 | Loss 0.3414 | Train Acc. 0.8792 |

Epoch 00085 | Loss 0.3359 | Train Acc. 0.8862 |

Epoch 00090 | Loss 0.3426 | Train Acc. 0.8850 |

Epoch 00095 | Loss 0.3329 | Train Acc. 0.8839 |

Epoch 00100 | Loss 0.3250 | Train Acc. 0.8897 |

Epoch 00105 | Loss 0.3213 | Train Acc. 0.8978 |

Epoch 00110 | Loss 0.3295 | Train Acc. 0.8792 |

Epoch 00115 | Loss 0.3292 | Train Acc. 0.8908 |

Epoch 00120 | Loss 0.3211 | Train Acc. 0.8943 |

Epoch 00125 | Loss 0.3233 | Train Acc. 0.8931 |

Epoch 00130 | Loss 0.3225 | Train Acc. 0.8897 |

Epoch 00135 | Loss 0.3221 | Train Acc. 0.8908 |

Epoch 00140 | Loss 0.3077 | Train Acc. 0.8966 |

Epoch 00145 | Loss 0.3326 | Train Acc. 0.8931 |

Epoch 00150 | Loss 0.3246 | Train Acc. 0.8908 |

Epoch 00155 | Loss 0.3187 | Train Acc. 0.8966 |

Epoch 00160 | Loss 0.3193 | Train Acc. 0.8920 |

Epoch 00165 | Loss 0.3184 | Train Acc. 0.8955 |

Epoch 00170 | Loss 0.3205 | Train Acc. 0.8839 |

Epoch 00175 | Loss 0.3117 | Train Acc. 0.9001 |

Epoch 00180 | Loss 0.3206 | Train Acc. 0.8862 |

Epoch 00185 | Loss 0.3096 | Train Acc. 0.8862 |

Epoch 00190 | Loss 0.3172 | Train Acc. 0.8920 |

Epoch 00195 | Loss 0.3100 | Train Acc. 0.8955 |

Epoch 00200 | Loss 0.3013 | Train Acc. 0.8931 |

Epoch 00205 | Loss 0.3070 | Train Acc. 0.8920 |

Epoch 00210 | Loss 0.3052 | Train Acc. 0.8955 |

Epoch 00215 | Loss 0.3009 | Train Acc. 0.9013 |

Epoch 00220 | Loss 0.3192 | Train Acc. 0.8885 |

Epoch 00225 | Loss 0.3259 | Train Acc. 0.8920 |

Epoch 00230 | Loss 0.3233 | Train Acc. 0.8931 |

Epoch 00235 | Loss 0.3032 | Train Acc. 0.8990 |

Epoch 00240 | Loss 0.3064 | Train Acc. 0.8931 |

Epoch 00245 | Loss 0.3068 | Train Acc. 0.8978 |

Epoch 00250 | Loss 0.3088 | Train Acc. 0.8931 |

Epoch 00255 | Loss 0.2965 | Train Acc. 0.9024 |

Epoch 00260 | Loss 0.3132 | Train Acc. 0.8885 |

Epoch 00265 | Loss 0.3297 | Train Acc. 0.8815 |

Epoch 00270 | Loss 0.3106 | Train Acc. 0.9001 |

Epoch 00275 | Loss 0.3021 | Train Acc. 0.9001 |

Epoch 00280 | Loss 0.3143 | Train Acc. 0.8862 |

Epoch 00285 | Loss 0.2998 | Train Acc. 0.8966 |

Epoch 00290 | Loss 0.3038 | Train Acc. 0.9024 |

Epoch 00295 | Loss 0.3002 | Train Acc. 0.8943 |

Epoch 00300 | Loss 0.3040 | Train Acc. 0.8966 |

Epoch 00305 | Loss 0.3033 | Train Acc. 0.9024 |

Epoch 00310 | Loss 0.3083 | Train Acc. 0.8885 |

Epoch 00315 | Loss 0.3040 | Train Acc. 0.8990 |

Early stopping! No improvement for 100 consecutive epochs.

Fold : 5 | Test Accuracy = 0.8279 | F1 = 0.7975

Total = 42.4Gb Reserved = 0.8Gb Allocated = 0.4Gb

Clearing gpu memory

Total = 42.4Gb Reserved = 0.4Gb Allocated = 0.3Gb

5 Fold Cross Validation Accuracy = 82.90 ± 2.23

5 Fold Cross Validation F1 = 79.85 ± 3.19
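The summary lines above report the mean and spread of the per-fold test metrics as percentages. A minimal sketch of that aggregation follows. The helper name `summarise` is hypothetical, and whether the notebook uses the population or the sample standard deviation is not visible in the output, so NumPy's default (population, `ddof=0`) is assumed. Only the three fold accuracies printed in this part of the log are used, so the result will not reproduce the reported 5-fold figures.

```python
import numpy as np


def summarise(scores):
    """Return (mean, std) of fractional scores, expressed as percentages."""
    scores = np.asarray(scores, dtype=float) * 100
    # np.std defaults to the population standard deviation (ddof=0); this is an assumption.
    return scores.mean(), scores.std()


# Test accuracies for folds 3, 4 and 5 as printed in the log above.
fold_acc = [0.8140, 0.8000, 0.8279]
mean_acc, std_acc = summarise(fold_acc)
print("Accuracy over the folds shown = %2.2f ± %2.2f" % (mean_acc, std_acc))
```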