MOGDx

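This notebook shows a quick example of the original MOGDx: a GCN with a multi-modal encoder (MME) is trained with 5-fold stratified cross-validation on the TCGA BRCA RPPA and mRNA modalities to predict the PAM50 subtype.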

import pandas as pd

import numpy as np

import os

import sys

sys.path.insert(0 , './../')

from MAIN.utils import *

from MAIN.train import *

import MAIN.preprocess_functions

from MAIN.GNN_MME import GCN_MME , GSage_MME , GAT_MME

import torch

import torch.nn.functional as F

import dgl

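from dgl.dataloading import DataLoader , NeighborSampler , MultiLayerFullNeighborSampler # DataLoader and NeighborSampler are used in the evaluation step below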

import matplotlib.pyplot as plt

from sklearn.model_selection import StratifiedKFold

import networkx as nx

from datetime import datetime

import joblib

import warnings

import gc

import copy

from functools import reduce # used below to merge the per-modality DataFrames

warnings.filterwarnings("ignore")

print("Finished Library Import \n")

Finished Library Import

data_input = './../../data/TCGA/BRCA/raw'

snf_net = 'RPPA_mRNA_graph.graphml'

index_col = 'index'

target = 'paper_BRCA_Subtype_PAM50'

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu') # Use the GPU if available, else the CPU

print("Using %s device" % device)

get_gpu_memory()

datModalities , meta = data_parsing(data_input , ['RPPA', 'mRNA'] , target , index_col)

graph_file = data_input + '/../Networks/' + snf_net

g = nx.read_graphml(graph_file)

meta = meta.loc[sorted(meta.index)]

label = F.one_hot(torch.Tensor(list(meta.astype('category').cat.codes)).to(torch.int64))

skf = StratifiedKFold(n_splits=5 , shuffle=True)

print(skf)

MME_input_shapes = [ datModalities[mod].shape[1] for mod in datModalities]

h = reduce(merge_dfs , list(datModalities.values()))

h = h.loc[sorted(h.index)]

g = dgl.from_networkx(g , node_attrs=['idx' , 'label'])

g.ndata['feat'] = torch.Tensor(h.to_numpy())

g.ndata['label'] = label

del datModalities

gc.collect()

output_metrics = []

test_logits = []

test_labels = []

for i, (train_index, test_index) in enumerate(skf.split(meta.index, meta)) :

    model = GCN_MME(MME_input_shapes , [16 , 16] , 64 , [32], len(meta.unique())).to(device)

    print(model)

    print(g)

    g = g.to(device)

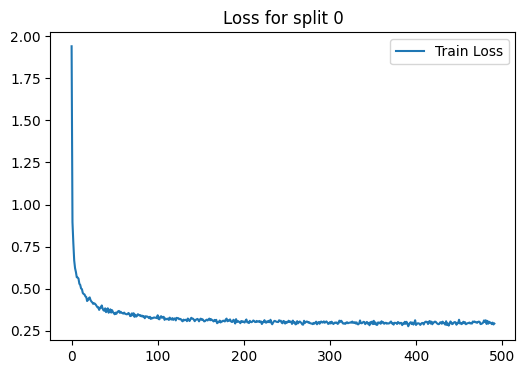

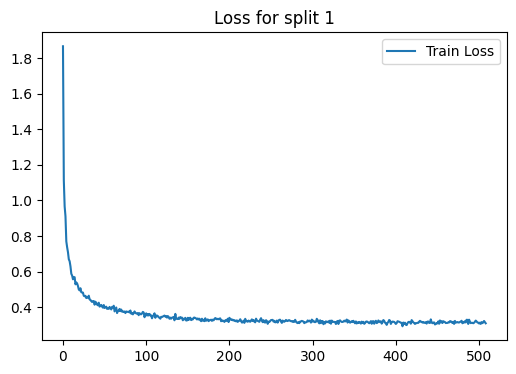

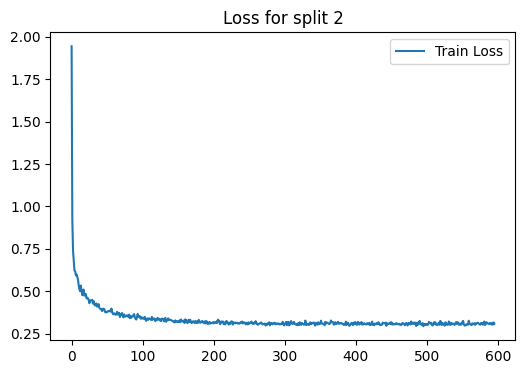

    loss_plot = train(g, train_index, device , model , label , 2000 , 1e-3 , 100)

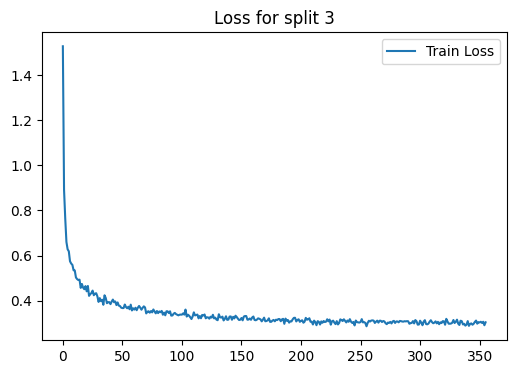

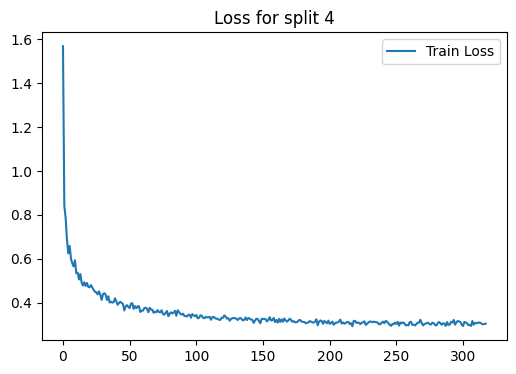

    plt.title(f'Loss for split {i}')

    plt.show()

    plt.clf()

    sampler = NeighborSampler(

        [15 for i in range(len(model.gnnlayers))], # fanout for each layer

        prefetch_node_feats=['feat'],

        prefetch_labels=['label'],

    )

    test_dataloader = DataLoader(

        g,

        torch.Tensor(test_index).to(torch.int64).to(device),

        sampler,

        device=device,

        batch_size=1024,

        shuffle=True,

        drop_last=False,

        num_workers=0,

        use_uva=False,

    )

    test_output_metrics = evaluate(model , g, test_dataloader)

    print(

        "Fold : {:01d} | Test Accuracy = {:.4f} | F1 = {:.4f} ".format(

        i+1 , test_output_metrics[1] , test_output_metrics[2] )

    )

    test_logits.extend(test_output_metrics[-2])

    test_labels.extend(test_output_metrics[-1])

    output_metrics.append(test_output_metrics)

    if i == 0 :

        best_model = model

        best_idx = i

    elif output_metrics[best_idx][1] < test_output_metrics[1] :

        best_model = model

        best_idx = i

    get_gpu_memory()

    del model

    gc.collect()

    torch.cuda.empty_cache()

    print('Clearing gpu memory')

    get_gpu_memory()

test_logits = torch.stack(test_logits)

test_labels = torch.stack(test_labels)

accuracy = []

F1 = []

i = 0

for metric in output_metrics :

    accuracy.append(metric[1])

    F1.append(metric[2])

print("%i Fold Cross Validation Accuracy = %2.2f \u00B1 %2.2f" %(5 , np.mean(accuracy)*100 , np.std(accuracy)*100))

print("%i Fold Cross Validation F1 = %2.2f \u00B1 %2.2f" %(5 , np.mean(F1)*100 , np.std(F1)*100))
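The two summary lines report the mean and the spread of the per-fold metrics. `np.std` defaults to the population standard deviation (`ddof=0`), so the standard library's `pstdev` is the matching call. The sketch below reproduces the calculation with the standard library only. The helper name `summarize` is hypothetical, and the four accuracies are the fold results visible in the printed log (folds 1 to 4), used purely as sample input.

```python
from statistics import fmean, pstdev


def summarize(values):
    """Return (mean, population std) of fold metrics, both in percent."""
    return fmean(values) * 100, pstdev(values) * 100


# Sample input: the fold accuracies that appear in the printed log.
fold_accuracy = [0.8657, 0.8977, 0.8698, 0.8651]

mean_pct, std_pct = summarize(fold_accuracy)
print("%i Fold Cross Validation Accuracy = %2.2f \u00B1 %2.2f"
      % (len(fold_accuracy), mean_pct, std_pct))
```

Using the sample standard deviation (`statistics.stdev`, or `np.std(..., ddof=1)`) would give a slightly larger spread for so few folds; which one to report is a design choice worth stating alongside the result.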

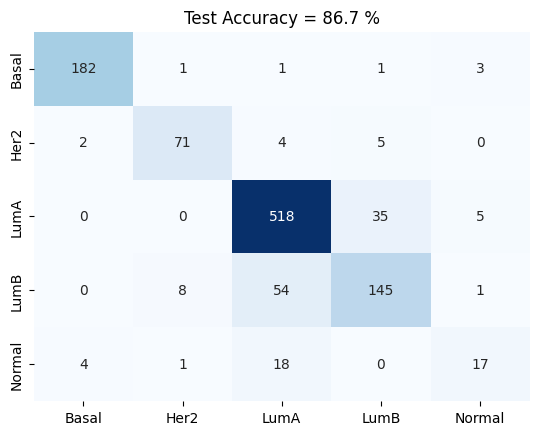

confusion_matrix(test_logits , test_labels , meta.astype('category').cat.categories)

plt.title('Test Accuracy = %2.1f %%' % (np.mean(accuracy)*100))

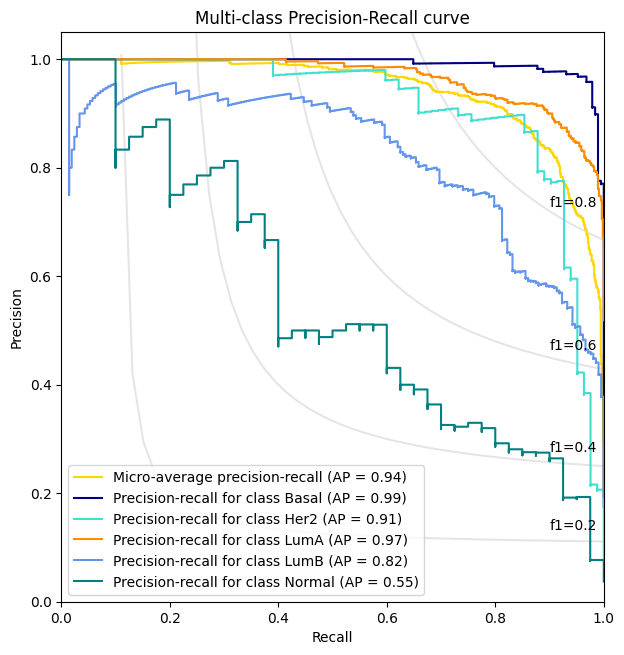

precision_recall_plot , all_predictions_conf = AUROC(test_logits, test_labels , meta)

node_predictions = []

node_true = []

display_label = meta.astype('category').cat.categories

for pred , true in zip(all_predictions_conf.argmax(1) , list(test_labels.detach().cpu().argmax(1).numpy())) :

    node_predictions.append(display_label[pred])

    node_true.append(display_label[true])

tst = pd.DataFrame({'Actual' : node_true , 'Predicted' : node_predictions})
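The final cell turns the arg-max of each prediction row and of each one-hot label row back into a class name. A minimal standard-library sketch of that mapping is shown here. The helper names `argmax` and `to_label_table` and the class names `c0`, `c1`, `c2` are placeholders for illustration, not names from MOGDx or from the dataset.

```python
def argmax(values):
    """Index of the largest entry in a sequence."""
    return max(range(len(values)), key=values.__getitem__)


def to_label_table(scores, one_hot_truth, class_names):
    """Pair the true and predicted class name for every sample."""
    rows = []
    for score_row, truth_row in zip(scores, one_hot_truth):
        rows.append({
            "Actual": class_names[argmax(truth_row)],
            "Predicted": class_names[argmax(score_row)],
        })
    return rows


class_names = ["c0", "c1", "c2"]             # placeholder class names
scores = [[0.1, 0.7, 0.2], [0.6, 0.3, 0.1]]  # per-class confidences
truth = [[0, 1, 0], [0, 0, 1]]               # one-hot labels

table = to_label_table(scores, truth, class_names)
for row in table:
    print(row)
```

The first sample is classified correctly and the second is not, which is the kind of row-level view the `Actual` / `Predicted` frame provides.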

Using cuda device

Total = 42.4Gb Reserved = 0.0Gb Allocated = 0.0Gb

StratifiedKFold(n_splits=5, random_state=None, shuffle=True)
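Stratified splitting keeps the class proportions roughly equal across folds, which matters when some subtypes are rare. The sketch below illustrates the idea with the standard library only: the indices of each class are shuffled and dealt round-robin across the folds. It is a simplified illustration, not scikit-learn's implementation, and the function name `stratified_folds` and the labels `A` and `B` are made up for the example.

```python
import random
from collections import defaultdict


def stratified_folds(labels, n_splits=5, seed=0):
    """Assign every sample index to one test fold, class by class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, lab in enumerate(labels):
        by_class[lab].append(idx)

    folds = [[] for _ in range(n_splits)]
    for lab in sorted(by_class):
        members = by_class[lab]
        rng.shuffle(members)
        # Deal the shuffled members of this class round-robin across folds.
        for pos, idx in enumerate(members):
            folds[pos % n_splits].append(idx)
    return [sorted(fold) for fold in folds]


labels = ["A"] * 10 + ["B"] * 5  # illustrative, imbalanced labels
folds = stratified_folds(labels, n_splits=5)
for k, test_idx in enumerate(folds):
    n_a = sum(labels[i] == "A" for i in test_idx)
    n_b = sum(labels[i] == "B" for i in test_idx)
    print(f"fold {k}: A={n_a} B={n_b}")
```

Because `shuffle=True` is set without a `random_state` in the notebook, the folds differ between runs; fixing a seed, as the sketch does, makes a split reproducible.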

GCN_MME(

(encoder_dims): ModuleList(

(0): Encoder(

(encoder): ModuleList(

(0): Linear(in_features=464, out_features=500, bias=True)

(1): Linear(in_features=500, out_features=16, bias=True)

)

(norm): ModuleList(

(0): BatchNorm1d(500, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm1d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(decoder): Sequential(

(0): Linear(in_features=16, out_features=64, bias=True)

)

(drop): Dropout(p=0.5, inplace=False)

)

(1): Encoder(

(encoder): ModuleList(

(0): Linear(in_features=29995, out_features=500, bias=True)

(1): Linear(in_features=500, out_features=16, bias=True)

)

(norm): ModuleList(

(0): BatchNorm1d(500, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm1d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(decoder): Sequential(

(0): Linear(in_features=16, out_features=64, bias=True)

)

(drop): Dropout(p=0.5, inplace=False)

)

)

(gnnlayers): ModuleList(

(0): GraphConv(in=64, out=32, normalization=both, activation=None)

(1): GraphConv(in=32, out=5, normalization=both, activation=None)

)

(batch_norms): ModuleList(

(0): BatchNorm1d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(drop): Dropout(p=0.5, inplace=False)

)

Graph(num_nodes=1076, num_edges=18300,

ndata_schemes={'idx': Scheme(shape=(), dtype=torch.int64), 'label': Scheme(shape=(5,), dtype=torch.int64), 'feat': Scheme(shape=(30459,), dtype=torch.float32)}

edata_schemes={})
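The printed shapes are consistent with each other: the two encoders take 464 and 29995 input features, and the node feature vector has 464 + 29995 = 30459 entries, so each node carries the modalities concatenated side by side. The sketch below shows how such a row could be cut back into per-modality slices from the list of input widths. How `GCN_MME` slices its input internally is not shown in this notebook, so `split_by_modality` is a hypothetical helper and the slicing order is an assumption.

```python
from itertools import accumulate


def split_by_modality(row, input_shapes):
    """Cut one concatenated feature row into per-modality slices."""
    if len(row) != sum(input_shapes):
        raise ValueError("row length does not match the summed modality widths")
    bounds = [0, *accumulate(input_shapes)]
    return [row[start:end] for start, end in zip(bounds, bounds[1:])]


input_shapes = [464, 29995]           # encoder input widths from the printed model
row = list(range(sum(input_shapes)))  # stand-in for one node's 'feat' vector

first_part, second_part = split_by_modality(row, input_shapes)
print(len(row), len(first_part), len(second_part))
```

The length check is worth keeping in real code: a mismatch between the merged feature table and the encoder widths would otherwise surface as a less obvious shape error inside the model.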

Epoch 00000 | Loss 1.9389 | Train Acc. 0.1581 |

Epoch 00005 | Loss 0.5994 | Train Acc. 0.8151 |

Epoch 00010 | Loss 0.5230 | Train Acc. 0.8198 |

Epoch 00015 | Loss 0.4665 | Train Acc. 0.8395 |

Epoch 00020 | Loss 0.4435 | Train Acc. 0.8384 |

Epoch 00025 | Loss 0.4107 | Train Acc. 0.8349 |

Epoch 00030 | Loss 0.3904 | Train Acc. 0.8547 |

Epoch 00035 | Loss 0.4012 | Train Acc. 0.8430 |

Epoch 00040 | Loss 0.3613 | Train Acc. 0.8628 |

Epoch 00045 | Loss 0.3781 | Train Acc. 0.8488 |

Epoch 00050 | Loss 0.3490 | Train Acc. 0.8721 |

Epoch 00055 | Loss 0.3688 | Train Acc. 0.8535 |

Epoch 00060 | Loss 0.3526 | Train Acc. 0.8640 |

Epoch 00065 | Loss 0.3498 | Train Acc. 0.8640 |

Epoch 00070 | Loss 0.3541 | Train Acc. 0.8674 |

Epoch 00075 | Loss 0.3350 | Train Acc. 0.8640 |

Epoch 00080 | Loss 0.3434 | Train Acc. 0.8651 |

Epoch 00085 | Loss 0.3254 | Train Acc. 0.8709 |

Epoch 00090 | Loss 0.3255 | Train Acc. 0.8733 |

Epoch 00095 | Loss 0.3286 | Train Acc. 0.8651 |

Epoch 00100 | Loss 0.3429 | Train Acc. 0.8663 |

Epoch 00105 | Loss 0.3275 | Train Acc. 0.8605 |

Epoch 00110 | Loss 0.3197 | Train Acc. 0.8744 |

Epoch 00115 | Loss 0.3199 | Train Acc. 0.8756 |

Epoch 00120 | Loss 0.3241 | Train Acc. 0.8709 |

Epoch 00125 | Loss 0.3193 | Train Acc. 0.8744 |

Epoch 00130 | Loss 0.3171 | Train Acc. 0.8756 |

Epoch 00135 | Loss 0.3236 | Train Acc. 0.8756 |

Epoch 00140 | Loss 0.3232 | Train Acc. 0.8686 |

Epoch 00145 | Loss 0.3175 | Train Acc. 0.8721 |

Epoch 00150 | Loss 0.3231 | Train Acc. 0.8663 |

Epoch 00155 | Loss 0.3083 | Train Acc. 0.8802 |

Epoch 00160 | Loss 0.3232 | Train Acc. 0.8744 |

Epoch 00165 | Loss 0.3062 | Train Acc. 0.8779 |

Epoch 00170 | Loss 0.3017 | Train Acc. 0.8814 |

Epoch 00175 | Loss 0.3058 | Train Acc. 0.8674 |

Epoch 00180 | Loss 0.3232 | Train Acc. 0.8756 |

Epoch 00185 | Loss 0.3074 | Train Acc. 0.8674 |

Epoch 00190 | Loss 0.2939 | Train Acc. 0.8802 |

Epoch 00195 | Loss 0.3036 | Train Acc. 0.8779 |

Epoch 00200 | Loss 0.3026 | Train Acc. 0.8721 |

Epoch 00205 | Loss 0.3016 | Train Acc. 0.8767 |

Epoch 00210 | Loss 0.3055 | Train Acc. 0.8756 |

Epoch 00215 | Loss 0.3094 | Train Acc. 0.8744 |

Epoch 00220 | Loss 0.3060 | Train Acc. 0.8791 |

Epoch 00225 | Loss 0.3011 | Train Acc. 0.8709 |

Epoch 00230 | Loss 0.3018 | Train Acc. 0.8721 |

Epoch 00235 | Loss 0.3068 | Train Acc. 0.8674 |

Epoch 00240 | Loss 0.3025 | Train Acc. 0.8791 |

Epoch 00245 | Loss 0.2952 | Train Acc. 0.8837 |

Epoch 00250 | Loss 0.2988 | Train Acc. 0.8849 |

Epoch 00255 | Loss 0.3048 | Train Acc. 0.8849 |

Epoch 00260 | Loss 0.2890 | Train Acc. 0.8767 |

Epoch 00265 | Loss 0.3060 | Train Acc. 0.8709 |

Epoch 00270 | Loss 0.3103 | Train Acc. 0.8767 |

Epoch 00275 | Loss 0.2991 | Train Acc. 0.8884 |

Epoch 00280 | Loss 0.2904 | Train Acc. 0.8814 |

Epoch 00285 | Loss 0.2894 | Train Acc. 0.8756 |

Epoch 00290 | Loss 0.3058 | Train Acc. 0.8814 |

Epoch 00295 | Loss 0.3032 | Train Acc. 0.8779 |

Epoch 00300 | Loss 0.3019 | Train Acc. 0.8849 |

Epoch 00305 | Loss 0.2957 | Train Acc. 0.8826 |

Epoch 00310 | Loss 0.2953 | Train Acc. 0.8919 |

Epoch 00315 | Loss 0.2948 | Train Acc. 0.8849 |

Epoch 00320 | Loss 0.3013 | Train Acc. 0.8802 |

Epoch 00325 | Loss 0.2989 | Train Acc. 0.8826 |

Epoch 00330 | Loss 0.2942 | Train Acc. 0.8791 |

Epoch 00335 | Loss 0.3121 | Train Acc. 0.8721 |

Epoch 00340 | Loss 0.3028 | Train Acc. 0.8744 |

Epoch 00345 | Loss 0.2977 | Train Acc. 0.8837 |

Epoch 00350 | Loss 0.2905 | Train Acc. 0.8826 |

Epoch 00355 | Loss 0.2860 | Train Acc. 0.8884 |

Epoch 00360 | Loss 0.3072 | Train Acc. 0.8802 |

Epoch 00365 | Loss 0.2985 | Train Acc. 0.8744 |

Epoch 00370 | Loss 0.3012 | Train Acc. 0.8744 |

Epoch 00375 | Loss 0.2984 | Train Acc. 0.8721 |

Epoch 00380 | Loss 0.2991 | Train Acc. 0.8814 |

Epoch 00385 | Loss 0.2945 | Train Acc. 0.8791 |

Epoch 00390 | Loss 0.2944 | Train Acc. 0.8860 |

Epoch 00395 | Loss 0.2906 | Train Acc. 0.8802 |

Epoch 00400 | Loss 0.2854 | Train Acc. 0.8953 |

Epoch 00405 | Loss 0.2857 | Train Acc. 0.8942 |

Epoch 00410 | Loss 0.2996 | Train Acc. 0.8814 |

Epoch 00415 | Loss 0.3048 | Train Acc. 0.8826 |

Epoch 00420 | Loss 0.3003 | Train Acc. 0.8756 |

Epoch 00425 | Loss 0.2928 | Train Acc. 0.8884 |

Epoch 00430 | Loss 0.2926 | Train Acc. 0.8826 |

Epoch 00435 | Loss 0.3075 | Train Acc. 0.8733 |

Epoch 00440 | Loss 0.3061 | Train Acc. 0.8767 |

Epoch 00445 | Loss 0.3027 | Train Acc. 0.8756 |

Epoch 00450 | Loss 0.3163 | Train Acc. 0.8744 |

Epoch 00455 | Loss 0.3045 | Train Acc. 0.8791 |

Epoch 00460 | Loss 0.2962 | Train Acc. 0.8884 |

Epoch 00465 | Loss 0.2934 | Train Acc. 0.8767 |

Epoch 00470 | Loss 0.3017 | Train Acc. 0.8721 |

Epoch 00475 | Loss 0.2905 | Train Acc. 0.8802 |

Epoch 00480 | Loss 0.3035 | Train Acc. 0.8756 |

Epoch 00485 | Loss 0.3044 | Train Acc. 0.8802 |

Epoch 00490 | Loss 0.2883 | Train Acc. 0.8814 |

Early stopping! No improvement for 100 consecutive epochs.

Fold : 1 | Test Accuracy = 0.8657 | F1 = 0.8396

Total = 42.4Gb Reserved = 0.6Gb Allocated = 0.3Gb

Clearing gpu memory

Total = 42.4Gb Reserved = 0.3Gb Allocated = 0.3Gb
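The "Early stopping!" message in the log above suggests a patience rule: training ends once the monitored loss has not improved for a fixed number of consecutive epochs (100 in this run). The sketch below shows one common form of that rule with the standard library only. It is an assumption about the general technique rather than the code in `MAIN.train`; in particular, which loss is monitored and how improvement is defined there is not shown in this notebook. The function name `early_stopping_epoch` is hypothetical.

```python
def early_stopping_epoch(losses, patience):
    """Return the epoch at which training would stop, or None if it never does."""
    best = float("inf")
    stale = 0  # consecutive epochs without a new best loss
    for epoch, loss in enumerate(losses):
        if loss < best:
            best = loss
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                return epoch
    return None


# Toy loss curve: a new best at epoch 5, then three epochs without improvement.
losses = [1.0, 0.8, 0.7, 0.71, 0.72, 0.69, 0.70, 0.70, 0.70]
print(early_stopping_epoch(losses, patience=3))
```

A larger patience tolerates noisy loss curves like the ones printed here, at the cost of extra epochs after the best point.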

Epoch 00000 | Loss 1.8679 | Train Acc. 0.1301 |

Epoch 00005 | Loss 0.7363 | Train Acc. 0.7921 |

Epoch 00010 | Loss 0.5875 | Train Acc. 0.8165 |

Epoch 00015 | Loss 0.5280 | Train Acc. 0.8339 |

Epoch 00020 | Loss 0.4938 | Train Acc. 0.8223 |

Epoch 00025 | Loss 0.4635 | Train Acc. 0.8339 |

Epoch 00030 | Loss 0.4530 | Train Acc. 0.8397 |

Epoch 00035 | Loss 0.4287 | Train Acc. 0.8362 |

Epoch 00040 | Loss 0.4238 | Train Acc. 0.8409 |

Epoch 00045 | Loss 0.4059 | Train Acc. 0.8560 |

Epoch 00050 | Loss 0.3950 | Train Acc. 0.8571 |

Epoch 00055 | Loss 0.3900 | Train Acc. 0.8467 |

Epoch 00060 | Loss 0.3980 | Train Acc. 0.8537 |

Epoch 00065 | Loss 0.3663 | Train Acc. 0.8630 |

Epoch 00070 | Loss 0.3860 | Train Acc. 0.8490 |

Epoch 00075 | Loss 0.3664 | Train Acc. 0.8595 |

Epoch 00080 | Loss 0.3694 | Train Acc. 0.8571 |

Epoch 00085 | Loss 0.3674 | Train Acc. 0.8525 |

Epoch 00090 | Loss 0.3664 | Train Acc. 0.8641 |

Epoch 00095 | Loss 0.3644 | Train Acc. 0.8711 |

Epoch 00100 | Loss 0.3646 | Train Acc. 0.8618 |

Epoch 00105 | Loss 0.3534 | Train Acc. 0.8757 |

Epoch 00110 | Loss 0.3649 | Train Acc. 0.8676 |

Epoch 00115 | Loss 0.3421 | Train Acc. 0.8606 |

Epoch 00120 | Loss 0.3471 | Train Acc. 0.8664 |

Epoch 00125 | Loss 0.3407 | Train Acc. 0.8757 |

Epoch 00130 | Loss 0.3345 | Train Acc. 0.8757 |

Epoch 00135 | Loss 0.3616 | Train Acc. 0.8653 |

Epoch 00140 | Loss 0.3336 | Train Acc. 0.8711 |

Epoch 00145 | Loss 0.3346 | Train Acc. 0.8711 |

Epoch 00150 | Loss 0.3396 | Train Acc. 0.8757 |

Epoch 00155 | Loss 0.3324 | Train Acc. 0.8757 |

Epoch 00160 | Loss 0.3359 | Train Acc. 0.8641 |

Epoch 00165 | Loss 0.3265 | Train Acc. 0.8746 |

Epoch 00170 | Loss 0.3208 | Train Acc. 0.8757 |

Epoch 00175 | Loss 0.3210 | Train Acc. 0.8792 |

Epoch 00180 | Loss 0.3292 | Train Acc. 0.8722 |

Epoch 00185 | Loss 0.3312 | Train Acc. 0.8746 |

Epoch 00190 | Loss 0.3204 | Train Acc. 0.8780 |

Epoch 00195 | Loss 0.3248 | Train Acc. 0.8722 |

Epoch 00200 | Loss 0.3396 | Train Acc. 0.8688 |

Epoch 00205 | Loss 0.3278 | Train Acc. 0.8664 |

Epoch 00210 | Loss 0.3168 | Train Acc. 0.8676 |

Epoch 00215 | Loss 0.3130 | Train Acc. 0.8815 |

Epoch 00220 | Loss 0.3208 | Train Acc. 0.8722 |

Epoch 00225 | Loss 0.3175 | Train Acc. 0.8792 |

Epoch 00230 | Loss 0.3178 | Train Acc. 0.8757 |

Epoch 00235 | Loss 0.3188 | Train Acc. 0.8711 |

Epoch 00240 | Loss 0.3151 | Train Acc. 0.8792 |

Epoch 00245 | Loss 0.3219 | Train Acc. 0.8722 |

Epoch 00250 | Loss 0.3270 | Train Acc. 0.8757 |

Epoch 00255 | Loss 0.3143 | Train Acc. 0.8792 |

Epoch 00260 | Loss 0.3285 | Train Acc. 0.8699 |

Epoch 00265 | Loss 0.3191 | Train Acc. 0.8722 |

Epoch 00270 | Loss 0.3214 | Train Acc. 0.8815 |

Epoch 00275 | Loss 0.3212 | Train Acc. 0.8746 |

Epoch 00280 | Loss 0.3183 | Train Acc. 0.8804 |

Epoch 00285 | Loss 0.3188 | Train Acc. 0.8746 |

Epoch 00290 | Loss 0.3095 | Train Acc. 0.8769 |

Epoch 00295 | Loss 0.3193 | Train Acc. 0.8699 |

Epoch 00300 | Loss 0.3186 | Train Acc. 0.8746 |

Epoch 00305 | Loss 0.3340 | Train Acc. 0.8664 |

Epoch 00310 | Loss 0.3095 | Train Acc. 0.8815 |

Epoch 00315 | Loss 0.3080 | Train Acc. 0.8757 |

Epoch 00320 | Loss 0.3118 | Train Acc. 0.8711 |

Epoch 00325 | Loss 0.3165 | Train Acc. 0.8769 |

Epoch 00330 | Loss 0.3219 | Train Acc. 0.8734 |

Epoch 00335 | Loss 0.3163 | Train Acc. 0.8699 |

Epoch 00340 | Loss 0.3236 | Train Acc. 0.8746 |

Epoch 00345 | Loss 0.3120 | Train Acc. 0.8804 |

Epoch 00350 | Loss 0.3160 | Train Acc. 0.8722 |

Epoch 00355 | Loss 0.3106 | Train Acc. 0.8780 |

Epoch 00360 | Loss 0.3107 | Train Acc. 0.8862 |

Epoch 00365 | Loss 0.3190 | Train Acc. 0.8792 |

Epoch 00370 | Loss 0.3208 | Train Acc. 0.8757 |

Epoch 00375 | Loss 0.3180 | Train Acc. 0.8804 |

Epoch 00380 | Loss 0.3189 | Train Acc. 0.8815 |

Epoch 00385 | Loss 0.3269 | Train Acc. 0.8769 |

Epoch 00390 | Loss 0.3112 | Train Acc. 0.8862 |

Epoch 00395 | Loss 0.3149 | Train Acc. 0.8711 |

Epoch 00400 | Loss 0.3050 | Train Acc. 0.8920 |

Epoch 00405 | Loss 0.3147 | Train Acc. 0.8769 |

Epoch 00410 | Loss 0.3062 | Train Acc. 0.8699 |

Epoch 00415 | Loss 0.3158 | Train Acc. 0.8722 |

Epoch 00420 | Loss 0.3207 | Train Acc. 0.8746 |

Epoch 00425 | Loss 0.3097 | Train Acc. 0.8827 |

Epoch 00430 | Loss 0.3174 | Train Acc. 0.8815 |

Epoch 00435 | Loss 0.3114 | Train Acc. 0.8815 |

Epoch 00440 | Loss 0.3109 | Train Acc. 0.8734 |

Epoch 00445 | Loss 0.3128 | Train Acc. 0.8827 |

Epoch 00450 | Loss 0.3081 | Train Acc. 0.8804 |

Epoch 00455 | Loss 0.3205 | Train Acc. 0.8792 |

Epoch 00460 | Loss 0.3102 | Train Acc. 0.8839 |

Epoch 00465 | Loss 0.3206 | Train Acc. 0.8769 |

Epoch 00470 | Loss 0.3053 | Train Acc. 0.8827 |

Epoch 00475 | Loss 0.3134 | Train Acc. 0.8804 |

Epoch 00480 | Loss 0.3090 | Train Acc. 0.8839 |

Epoch 00485 | Loss 0.3284 | Train Acc. 0.8711 |

Epoch 00490 | Loss 0.3098 | Train Acc. 0.8827 |

Epoch 00495 | Loss 0.3173 | Train Acc. 0.8711 |

Epoch 00500 | Loss 0.3077 | Train Acc. 0.8839 |

Epoch 00505 | Loss 0.3137 | Train Acc. 0.8746 |

Early stopping! No improvement for 100 consecutive epochs.

<Figure size 640x480 with 0 Axes>

Fold : 2 | Test Accuracy = 0.8977 | F1 = 0.8707

Total = 42.4Gb Reserved = 0.7Gb Allocated = 0.3Gb

Clearing gpu memory

Total = 42.4Gb Reserved = 0.3Gb Allocated = 0.3Gb

Epoch 00000 | Loss 1.9434 | Train Acc. 0.1417 |

Epoch 00005 | Loss 0.6182 | Train Acc. 0.8002 |

Epoch 00010 | Loss 0.5353 | Train Acc. 0.8165 |

Epoch 00015 | Loss 0.4779 | Train Acc. 0.8420 |

Epoch 00020 | Loss 0.4841 | Train Acc. 0.8293 |

Epoch 00025 | Loss 0.4298 | Train Acc. 0.8467 |

Epoch 00030 | Loss 0.4281 | Train Acc. 0.8479 |

Epoch 00035 | Loss 0.4288 | Train Acc. 0.8444 |

Epoch 00040 | Loss 0.3980 | Train Acc. 0.8630 |

Epoch 00045 | Loss 0.3977 | Train Acc. 0.8455 |

Epoch 00050 | Loss 0.3776 | Train Acc. 0.8595 |

Epoch 00055 | Loss 0.3804 | Train Acc. 0.8606 |

Epoch 00060 | Loss 0.3702 | Train Acc. 0.8688 |

Epoch 00065 | Loss 0.3633 | Train Acc. 0.8757 |

Epoch 00070 | Loss 0.3626 | Train Acc. 0.8711 |

Epoch 00075 | Loss 0.3530 | Train Acc. 0.8676 |

Epoch 00080 | Loss 0.3483 | Train Acc. 0.8688 |

Epoch 00085 | Loss 0.3513 | Train Acc. 0.8664 |

Epoch 00090 | Loss 0.3387 | Train Acc. 0.8757 |

Epoch 00095 | Loss 0.3492 | Train Acc. 0.8618 |

Epoch 00100 | Loss 0.3458 | Train Acc. 0.8722 |

Epoch 00105 | Loss 0.3259 | Train Acc. 0.8908 |

Epoch 00110 | Loss 0.3394 | Train Acc. 0.8630 |

Epoch 00115 | Loss 0.3386 | Train Acc. 0.8664 |

Epoch 00120 | Loss 0.3330 | Train Acc. 0.8641 |

Epoch 00125 | Loss 0.3270 | Train Acc. 0.8769 |

Epoch 00130 | Loss 0.3235 | Train Acc. 0.8769 |

Epoch 00135 | Loss 0.3309 | Train Acc. 0.8699 |

Epoch 00140 | Loss 0.3306 | Train Acc. 0.8676 |

Epoch 00145 | Loss 0.3167 | Train Acc. 0.8873 |

Epoch 00150 | Loss 0.3192 | Train Acc. 0.8862 |

Epoch 00155 | Loss 0.3240 | Train Acc. 0.8711 |

Epoch 00160 | Loss 0.3350 | Train Acc. 0.8734 |

Epoch 00165 | Loss 0.3325 | Train Acc. 0.8769 |

Epoch 00170 | Loss 0.3187 | Train Acc. 0.8757 |

Epoch 00175 | Loss 0.3140 | Train Acc. 0.8908 |

Epoch 00180 | Loss 0.3225 | Train Acc. 0.8746 |

Epoch 00185 | Loss 0.3165 | Train Acc. 0.8757 |

Epoch 00190 | Loss 0.3240 | Train Acc. 0.8850 |

Epoch 00195 | Loss 0.3102 | Train Acc. 0.8769 |

Epoch 00200 | Loss 0.3115 | Train Acc. 0.8769 |

Epoch 00205 | Loss 0.3145 | Train Acc. 0.8839 |

Epoch 00210 | Loss 0.3128 | Train Acc. 0.8815 |

Epoch 00215 | Loss 0.3166 | Train Acc. 0.8815 |

Epoch 00220 | Loss 0.3160 | Train Acc. 0.8780 |

Epoch 00225 | Loss 0.3236 | Train Acc. 0.8734 |

Epoch 00230 | Loss 0.3112 | Train Acc. 0.8804 |

Epoch 00235 | Loss 0.3137 | Train Acc. 0.8920 |

Epoch 00240 | Loss 0.3225 | Train Acc. 0.8769 |

Epoch 00245 | Loss 0.3056 | Train Acc. 0.8885 |

Epoch 00250 | Loss 0.3098 | Train Acc. 0.8804 |

Epoch 00255 | Loss 0.3089 | Train Acc. 0.8862 |

Epoch 00260 | Loss 0.3095 | Train Acc. 0.8804 |

Epoch 00265 | Loss 0.3105 | Train Acc. 0.8815 |

Epoch 00270 | Loss 0.3080 | Train Acc. 0.8850 |

Epoch 00275 | Loss 0.3028 | Train Acc. 0.8850 |

Epoch 00280 | Loss 0.3130 | Train Acc. 0.8815 |

Epoch 00285 | Loss 0.3149 | Train Acc. 0.8746 |

Epoch 00290 | Loss 0.3074 | Train Acc. 0.8746 |

Epoch 00295 | Loss 0.3089 | Train Acc. 0.8815 |

Epoch 00300 | Loss 0.3069 | Train Acc. 0.8757 |

Epoch 00305 | Loss 0.3198 | Train Acc. 0.8676 |

Epoch 00310 | Loss 0.3113 | Train Acc. 0.8885 |

Epoch 00315 | Loss 0.3056 | Train Acc. 0.8839 |

Epoch 00320 | Loss 0.3009 | Train Acc. 0.8815 |

Epoch 00325 | Loss 0.3087 | Train Acc. 0.8827 |

Epoch 00330 | Loss 0.3016 | Train Acc. 0.8862 |

Epoch 00335 | Loss 0.3083 | Train Acc. 0.8897 |

Epoch 00340 | Loss 0.3154 | Train Acc. 0.8815 |

Epoch 00345 | Loss 0.3095 | Train Acc. 0.8908 |

Epoch 00350 | Loss 0.3089 | Train Acc. 0.8757 |

Epoch 00355 | Loss 0.3095 | Train Acc. 0.8908 |

Epoch 00360 | Loss 0.3154 | Train Acc. 0.8815 |

Epoch 00365 | Loss 0.3283 | Train Acc. 0.8746 |

Epoch 00370 | Loss 0.3053 | Train Acc. 0.8862 |

Epoch 00375 | Loss 0.3203 | Train Acc. 0.8792 |

Epoch 00380 | Loss 0.3132 | Train Acc. 0.8827 |

Epoch 00385 | Loss 0.3035 | Train Acc. 0.8873 |

Epoch 00390 | Loss 0.3065 | Train Acc. 0.8873 |

Epoch 00395 | Loss 0.3115 | Train Acc. 0.8839 |

Epoch 00400 | Loss 0.3133 | Train Acc. 0.8827 |

Epoch 00405 | Loss 0.3043 | Train Acc. 0.8827 |

Epoch 00410 | Loss 0.3105 | Train Acc. 0.8862 |

Epoch 00415 | Loss 0.3057 | Train Acc. 0.8862 |

Epoch 00420 | Loss 0.3011 | Train Acc. 0.8955 |

Epoch 00425 | Loss 0.3044 | Train Acc. 0.8873 |

Epoch 00430 | Loss 0.3141 | Train Acc. 0.8769 |

Epoch 00435 | Loss 0.3039 | Train Acc. 0.8908 |

Epoch 00440 | Loss 0.3109 | Train Acc. 0.8885 |

Epoch 00445 | Loss 0.3065 | Train Acc. 0.8757 |

Epoch 00450 | Loss 0.3184 | Train Acc. 0.8815 |

Epoch 00455 | Loss 0.3055 | Train Acc. 0.8873 |

Epoch 00460 | Loss 0.3069 | Train Acc. 0.8943 |

Epoch 00465 | Loss 0.3113 | Train Acc. 0.8885 |

Epoch 00470 | Loss 0.3109 | Train Acc. 0.8908 |

Epoch 00475 | Loss 0.3105 | Train Acc. 0.8792 |

Epoch 00480 | Loss 0.3086 | Train Acc. 0.8873 |

Epoch 00485 | Loss 0.2957 | Train Acc. 0.8885 |

Epoch 00490 | Loss 0.3261 | Train Acc. 0.8769 |

Epoch 00495 | Loss 0.2943 | Train Acc. 0.8850 |

Epoch 00500 | Loss 0.3079 | Train Acc. 0.8873 |

Epoch 00505 | Loss 0.3112 | Train Acc. 0.8780 |

Epoch 00510 | Loss 0.3180 | Train Acc. 0.8769 |

Epoch 00515 | Loss 0.3021 | Train Acc. 0.8897 |

Epoch 00520 | Loss 0.3170 | Train Acc. 0.8862 |

Epoch 00525 | Loss 0.2976 | Train Acc. 0.8850 |

Epoch 00530 | Loss 0.3043 | Train Acc. 0.8862 |

Epoch 00535 | Loss 0.3051 | Train Acc. 0.8827 |

Epoch 00540 | Loss 0.3102 | Train Acc. 0.8815 |

Epoch 00545 | Loss 0.3036 | Train Acc. 0.8839 |

Epoch 00550 | Loss 0.3106 | Train Acc. 0.8827 |

Epoch 00555 | Loss 0.2998 | Train Acc. 0.8862 |

Epoch 00560 | Loss 0.3125 | Train Acc. 0.8873 |

Epoch 00565 | Loss 0.3081 | Train Acc. 0.8931 |

Epoch 00570 | Loss 0.3103 | Train Acc. 0.8746 |

Epoch 00575 | Loss 0.3031 | Train Acc. 0.8827 |

Epoch 00580 | Loss 0.3160 | Train Acc. 0.8780 |

Epoch 00585 | Loss 0.3121 | Train Acc. 0.8780 |

Epoch 00590 | Loss 0.3071 | Train Acc. 0.8885 |

Epoch 00595 | Loss 0.3088 | Train Acc. 0.8827 |

Early stopping! No improvement for 100 consecutive epochs.

<Figure size 640x480 with 0 Axes>

Fold : 3 | Test Accuracy = 0.8698 | F1 = 0.8476

Total = 42.4Gb Reserved = 0.7Gb Allocated = 0.4Gb

Clearing gpu memory

Total = 42.4Gb Reserved = 0.3Gb Allocated = 0.3Gb

Epoch 00000 | Loss 1.5269 | Train Acc. 0.3391 |

Epoch 00005 | Loss 0.6190 | Train Acc. 0.7851 |

Epoch 00010 | Loss 0.5343 | Train Acc. 0.8235 |

Epoch 00015 | Loss 0.4570 | Train Acc. 0.8444 |

Epoch 00020 | Loss 0.4418 | Train Acc. 0.8316 |

Epoch 00025 | Loss 0.4451 | Train Acc. 0.8409 |

Epoch 00030 | Loss 0.3949 | Train Acc. 0.8490 |

Epoch 00035 | Loss 0.4243 | Train Acc. 0.8293 |

Epoch 00040 | Loss 0.3852 | Train Acc. 0.8571 |

Epoch 00045 | Loss 0.3810 | Train Acc. 0.8571 |

Epoch 00050 | Loss 0.3677 | Train Acc. 0.8595 |

Epoch 00055 | Loss 0.3736 | Train Acc. 0.8420 |

Epoch 00060 | Loss 0.3609 | Train Acc. 0.8664 |

Epoch 00065 | Loss 0.3693 | Train Acc. 0.8571 |

Epoch 00070 | Loss 0.3441 | Train Acc. 0.8630 |

Epoch 00075 | Loss 0.3487 | Train Acc. 0.8606 |

Epoch 00080 | Loss 0.3454 | Train Acc. 0.8746 |

Epoch 00085 | Loss 0.3475 | Train Acc. 0.8595 |

Epoch 00090 | Loss 0.3534 | Train Acc. 0.8560 |

Epoch 00095 | Loss 0.3410 | Train Acc. 0.8676 |

Epoch 00100 | Loss 0.3385 | Train Acc. 0.8583 |

Epoch 00105 | Loss 0.3385 | Train Acc. 0.8630 |

Epoch 00110 | Loss 0.3487 | Train Acc. 0.8560 |

Epoch 00115 | Loss 0.3323 | Train Acc. 0.8676 |

Epoch 00120 | Loss 0.3224 | Train Acc. 0.8711 |

Epoch 00125 | Loss 0.3254 | Train Acc. 0.8827 |

Epoch 00130 | Loss 0.3139 | Train Acc. 0.8711 |

Epoch 00135 | Loss 0.3130 | Train Acc. 0.8815 |

Epoch 00140 | Loss 0.3299 | Train Acc. 0.8664 |

Epoch 00145 | Loss 0.3339 | Train Acc. 0.8769 |

Epoch 00150 | Loss 0.3237 | Train Acc. 0.8630 |

Epoch 00155 | Loss 0.3161 | Train Acc. 0.8850 |

Epoch 00160 | Loss 0.3302 | Train Acc. 0.8746 |

Epoch 00165 | Loss 0.3203 | Train Acc. 0.8804 |

Epoch 00170 | Loss 0.3093 | Train Acc. 0.8711 |

Epoch 00175 | Loss 0.3069 | Train Acc. 0.8850 |

Epoch 00180 | Loss 0.3145 | Train Acc. 0.8757 |

Epoch 00185 | Loss 0.3201 | Train Acc. 0.8746 |

Epoch 00190 | Loss 0.3033 | Train Acc. 0.8885 |

Epoch 00195 | Loss 0.3247 | Train Acc. 0.8676 |

Epoch 00200 | Loss 0.3114 | Train Acc. 0.8815 |

Epoch 00205 | Loss 0.3162 | Train Acc. 0.8688 |

Epoch 00210 | Loss 0.2951 | Train Acc. 0.8839 |

Epoch 00215 | Loss 0.3066 | Train Acc. 0.8815 |

Epoch 00220 | Loss 0.3059 | Train Acc. 0.8769 |

Epoch 00225 | Loss 0.2942 | Train Acc. 0.8897 |

Epoch 00230 | Loss 0.3100 | Train Acc. 0.8792 |

Epoch 00235 | Loss 0.3103 | Train Acc. 0.8827 |

Epoch 00240 | Loss 0.3072 | Train Acc. 0.8780 |

Epoch 00245 | Loss 0.2909 | Train Acc. 0.8780 |

Epoch 00250 | Loss 0.3060 | Train Acc. 0.8780 |

Epoch 00255 | Loss 0.2871 | Train Acc. 0.8873 |

Epoch 00260 | Loss 0.3132 | Train Acc. 0.8757 |

Epoch 00265 | Loss 0.3033 | Train Acc. 0.8839 |

Epoch 00270 | Loss 0.3086 | Train Acc. 0.8815 |

Epoch 00275 | Loss 0.3066 | Train Acc. 0.8746 |

Epoch 00280 | Loss 0.3085 | Train Acc. 0.8827 |

Epoch 00285 | Loss 0.3080 | Train Acc. 0.8711 |

Epoch 00290 | Loss 0.3092 | Train Acc. 0.8815 |

Epoch 00295 | Loss 0.2998 | Train Acc. 0.8769 |

Epoch 00300 | Loss 0.3117 | Train Acc. 0.8757 |

Epoch 00305 | Loss 0.2973 | Train Acc. 0.8850 |

Epoch 00310 | Loss 0.3056 | Train Acc. 0.8792 |

Epoch 00315 | Loss 0.3067 | Train Acc. 0.8734 |

Epoch 00320 | Loss 0.3091 | Train Acc. 0.8873 |

Epoch 00325 | Loss 0.2984 | Train Acc. 0.8827 |

Epoch 00330 | Loss 0.3071 | Train Acc. 0.8722 |

Epoch 00335 | Loss 0.3097 | Train Acc. 0.8792 |

Epoch 00340 | Loss 0.3100 | Train Acc. 0.8746 |

Epoch 00345 | Loss 0.2984 | Train Acc. 0.8815 |

Epoch 00350 | Loss 0.3040 | Train Acc. 0.8827 |

Epoch 00355 | Loss 0.3046 | Train Acc. 0.8792 |

Early stopping! No improvement for 100 consecutive epochs.

<Figure size 640x480 with 0 Axes>

Fold : 4 | Test Accuracy = 0.8651 | F1 = 0.8348

Total = 42.4Gb Reserved = 0.7Gb Allocated = 0.4Gb

Clearing gpu memory

Total = 42.4Gb Reserved = 0.3Gb Allocated = 0.3Gb

GCN_MME(

(encoder_dims): ModuleList(

(0): Encoder(

(encoder): ModuleList(

(0): Linear(in_features=464, out_features=500, bias=True)

(1): Linear(in_features=500, out_features=16, bias=True)

)

(norm): ModuleList(

(0): BatchNorm1d(500, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm1d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(decoder): Sequential(

(0): Linear(in_features=16, out_features=64, bias=True)

)

(drop): Dropout(p=0.5, inplace=False)

)

(1): Encoder(

(encoder): ModuleList(

(0): Linear(in_features=29995, out_features=500, bias=True)

(1): Linear(in_features=500, out_features=16, bias=True)

)

(norm): ModuleList(

(0): BatchNorm1d(500, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(1): BatchNorm1d(16, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(decoder): Sequential(

(0): Linear(in_features=16, out_features=64, bias=True)

)

(drop): Dropout(p=0.5, inplace=False)

)

)

(gnnlayers): ModuleList(

(0): GraphConv(in=64, out=32, normalization=both, activation=None)

(1): GraphConv(in=32, out=5, normalization=both, activation=None)

)

(batch_norms): ModuleList(

(0): BatchNorm1d(32, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(drop): Dropout(p=0.5, inplace=False)

)
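The printed module sizes make it easy to see where the model's parameters sit. The sketch below is a back-of-the-envelope count in plain Python that uses only the layer dimensions shown in the repr above. It assumes every `Linear` layer has a bias (the repr says `bias=True`), that each `BatchNorm1d` contributes a scale and a shift per feature, and that each `GraphConv` carries a weight matrix plus a bias vector; the last point is an assumption, since the repr does not print it. The helper names are hypothetical.

```python
def linear_params(n_in, n_out, bias=True):
    """Weights plus optional bias of a dense layer."""
    return n_in * n_out + (n_out if bias else 0)


def batchnorm_params(n_features):
    """affine=True: one scale and one shift per feature (running stats are buffers)."""
    return 2 * n_features


def encoder_params(n_in, hidden=500, latent=16, decoder_out=64):
    """One modality encoder as printed: two Linear, two BatchNorm1d, one decoder Linear."""
    return (
        linear_params(n_in, hidden)
        + linear_params(hidden, latent)
        + batchnorm_params(hidden)
        + batchnorm_params(latent)
        + linear_params(latent, decoder_out)
    )


counts = {
    "encoder_0": encoder_params(464),
    "encoder_1": encoder_params(29995),
    # GraphConv(64 -> 32), GraphConv(32 -> 5), BatchNorm1d(32); GraphConv bias assumed
    "gnn": linear_params(64, 32) + linear_params(32, 5) + batchnorm_params(32),
}
total = sum(counts.values())

for name, n in counts.items():
    print(f"{name}: {n:,} parameters ({n / total:.1%} of total)")
```

Under these assumptions almost all parameters belong to the first linear layer of the 29995-input encoder (presumably the mRNA modality, given the order passed to `data_parsing`), while the graph layers account for only a few thousand.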

Graph(num_nodes=1076, num_edges=18300,

ndata_schemes={'idx': Scheme(shape=(), dtype=torch.int64), 'label': Scheme(shape=(5,), dtype=torch.int64), 'feat': Scheme(shape=(30459,), dtype=torch.float32)}

edata_schemes={})
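The `feat` width of 30459 in the graph schema equals the sum of the two encoder input sizes printed above (464 + 29995), which matches the notebook merging all modalities into one matrix before attaching it to the graph. How `GCN_MME` splits that matrix back into per-modality blocks is not shown in this section; the sketch below illustrates one straightforward way to derive the column ranges from the list of input sizes. The function name `modality_slices` is hypothetical.

```python
from itertools import accumulate


def modality_slices(input_shapes):
    """Return one (start, stop) column range per modality, in order."""
    stops = list(accumulate(input_shapes))
    starts = [0] + stops[:-1]
    return list(zip(starts, stops))


input_shapes = [464, 29995]      # in_features of the two encoders in the model repr
slices = modality_slices(input_shapes)
feat_width = slices[-1][1]       # total width of the concatenated feature vector

# Stand-in for one node's feature row; a real row would hold measured values
row = list(range(feat_width))
parts = [row[start:stop] for start, stop in slices]

print(slices, feat_width)
```

Each element of `parts` would then be passed to the encoder whose `in_features` matches its length.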

Epoch 00000 | Loss 1.5689 | Train Acc. 0.3415 |

Epoch 00005 | Loss 0.6583 | Train Acc. 0.8142 |

Epoch 00010 | Loss 0.5338 | Train Acc. 0.8444 |

Epoch 00015 | Loss 0.4778 | Train Acc. 0.8490 |

Epoch 00020 | Loss 0.4694 | Train Acc. 0.8525 |

Epoch 00025 | Loss 0.4469 | Train Acc. 0.8560 |

Epoch 00030 | Loss 0.4383 | Train Acc. 0.8502 |

Epoch 00035 | Loss 0.4002 | Train Acc. 0.8560 |

Epoch 00040 | Loss 0.4053 | Train Acc. 0.8525 |

Epoch 00045 | Loss 0.3930 | Train Acc. 0.8571 |

Epoch 00050 | Loss 0.3750 | Train Acc. 0.8688 |

Epoch 00055 | Loss 0.3744 | Train Acc. 0.8711 |

Epoch 00060 | Loss 0.3633 | Train Acc. 0.8618 |

Epoch 00065 | Loss 0.3761 | Train Acc. 0.8630 |

Epoch 00070 | Loss 0.3556 | Train Acc. 0.8734 |

Epoch 00075 | Loss 0.3472 | Train Acc. 0.8676 |

Epoch 00080 | Loss 0.3488 | Train Acc. 0.8734 |

Epoch 00085 | Loss 0.3398 | Train Acc. 0.8815 |

Epoch 00090 | Loss 0.3493 | Train Acc. 0.8804 |

Epoch 00095 | Loss 0.3445 | Train Acc. 0.8734 |

Epoch 00100 | Loss 0.3432 | Train Acc. 0.8711 |

Epoch 00105 | Loss 0.3304 | Train Acc. 0.8722 |

Epoch 00110 | Loss 0.3349 | Train Acc. 0.8839 |

Epoch 00115 | Loss 0.3266 | Train Acc. 0.8827 |

Epoch 00120 | Loss 0.3306 | Train Acc. 0.8746 |

Epoch 00125 | Loss 0.3171 | Train Acc. 0.8746 |

Epoch 00130 | Loss 0.3261 | Train Acc. 0.8722 |

Epoch 00135 | Loss 0.3184 | Train Acc. 0.8862 |

Epoch 00140 | Loss 0.3270 | Train Acc. 0.8792 |

Epoch 00145 | Loss 0.3261 | Train Acc. 0.8746 |

Epoch 00150 | Loss 0.3249 | Train Acc. 0.8862 |

Epoch 00155 | Loss 0.3334 | Train Acc. 0.8676 |

Epoch 00160 | Loss 0.3206 | Train Acc. 0.8873 |

Epoch 00165 | Loss 0.3124 | Train Acc. 0.8885 |

Epoch 00170 | Loss 0.3266 | Train Acc. 0.8815 |

Epoch 00175 | Loss 0.3101 | Train Acc. 0.8792 |

Epoch 00180 | Loss 0.3118 | Train Acc. 0.8804 |

Epoch 00185 | Loss 0.3157 | Train Acc. 0.8827 |

Epoch 00190 | Loss 0.3239 | Train Acc. 0.8815 |

Epoch 00195 | Loss 0.3030 | Train Acc. 0.8873 |

Epoch 00200 | Loss 0.3027 | Train Acc. 0.8827 |

Epoch 00205 | Loss 0.3088 | Train Acc. 0.8780 |

Epoch 00210 | Loss 0.3092 | Train Acc. 0.8792 |

Epoch 00215 | Loss 0.3022 | Train Acc. 0.8908 |

Epoch 00220 | Loss 0.3073 | Train Acc. 0.8827 |

Epoch 00225 | Loss 0.3094 | Train Acc. 0.8827 |

Epoch 00230 | Loss 0.3132 | Train Acc. 0.8746 |

Epoch 00235 | Loss 0.3103 | Train Acc. 0.8804 |

Epoch 00240 | Loss 0.3128 | Train Acc. 0.8757 |

Epoch 00245 | Loss 0.2985 | Train Acc. 0.8873 |

Epoch 00250 | Loss 0.3025 | Train Acc. 0.8827 |

Epoch 00255 | Loss 0.3094 | Train Acc. 0.8815 |

Epoch 00260 | Loss 0.3106 | Train Acc. 0.8850 |

Epoch 00265 | Loss 0.3019 | Train Acc. 0.8931 |

Epoch 00270 | Loss 0.2954 | Train Acc. 0.8897 |

Epoch 00275 | Loss 0.3008 | Train Acc. 0.8862 |

Epoch 00280 | Loss 0.2954 | Train Acc. 0.8885 |

Epoch 00285 | Loss 0.3055 | Train Acc. 0.8862 |

Epoch 00290 | Loss 0.2998 | Train Acc. 0.8827 |

Epoch 00295 | Loss 0.3095 | Train Acc. 0.8897 |

Epoch 00300 | Loss 0.2930 | Train Acc. 0.8862 |

Epoch 00305 | Loss 0.2989 | Train Acc. 0.8920 |

Epoch 00310 | Loss 0.3060 | Train Acc. 0.8850 |

Epoch 00315 | Loss 0.3008 | Train Acc. 0.8897 |

Early stopping! No improvement for 100 consecutive epochs.

Fold : 5 | Test Accuracy = 0.8372 | F1 = 0.8257

Total = 42.4Gb Reserved = 0.7Gb Allocated = 0.4Gb

Clearing gpu memory

Total = 42.4Gb Reserved = 0.3Gb Allocated = 0.3Gb

5 Fold Cross Validation Accuracy = 86.71 ± 1.92

5 Fold Cross Validation F1 = 84.37 ± 1.52
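The two summary lines report the mean and spread of the per-fold scores. As a rough illustration of that arithmetic, the sketch below summarises a list of fold scores as "mean ± standard deviation" in percent. Only folds 4 and 5 appear in this part of the log, so the example uses just those two values and will not reproduce the five-fold figures above. Whether the notebook uses the population or the sample standard deviation is not shown; the population form (`pstdev`) is an assumption, and `summarize` is a hypothetical helper.

```python
from statistics import mean, pstdev


def summarize(scores):
    """Return (mean, std) in percent for per-fold scores given in [0, 1]."""
    return 100 * mean(scores), 100 * pstdev(scores)


# Scores copied from the "Fold : 4" and "Fold : 5" lines of the log
fold_acc = [0.8651, 0.8372]
fold_f1 = [0.8348, 0.8257]

acc_mean, acc_std = summarize(fold_acc)
f1_mean, f1_std = summarize(fold_f1)

print(f"Accuracy = {acc_mean:.2f} ± {acc_std:.2f}")
print(f"F1 = {f1_mean:.2f} ± {f1_std:.2f}")
```

Passing all five fold scores to `summarize` gives the same kind of summary as the lines above, up to the choice of standard-deviation estimator.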

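# Evaluate the best model; note that inference runs over every node in the graph,
# so this score covers training and held-out samples alike
print("Testing...")
acc = layerwise_infer(
    device, g, np.arange(len(g.nodes())), best_model, batch_size=4096
)
print("Test Accuracy {:.4f}".format(acc.item()))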

Testing...

100%|██████████| 1/1 [00:00<00:00, 185.19it/s]

100%|██████████| 1/1 [00:00<00:00, 267.68it/s]

Test Accuracy 0.8866

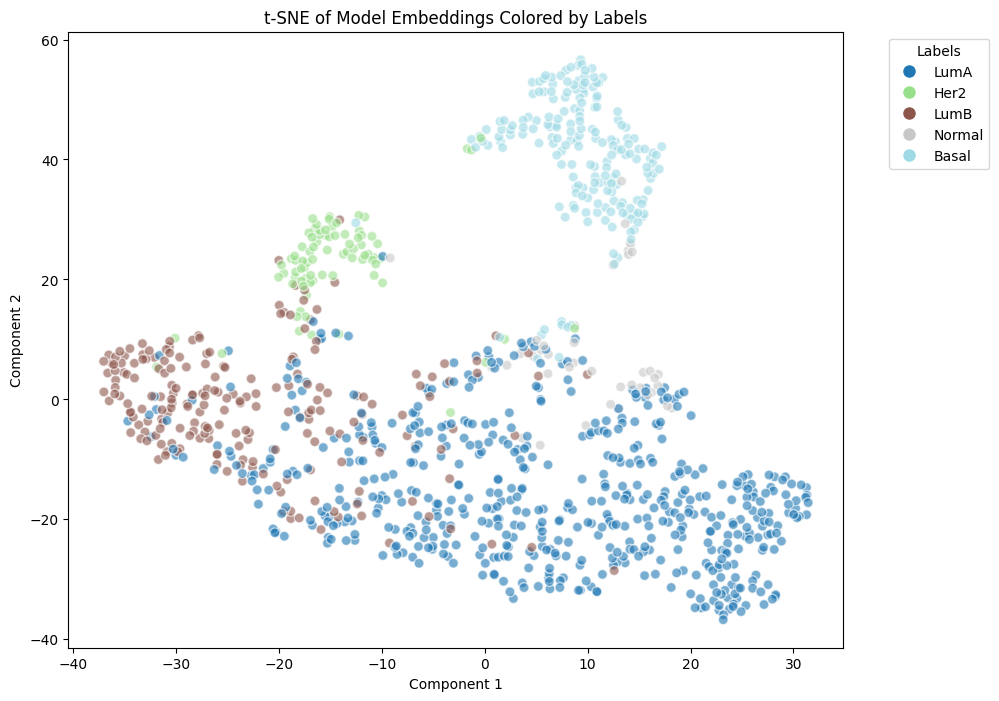

# Extract node embeddings from the best model and plot them with t-SNE
best_model.eval()
with torch.no_grad():
    emb = best_model.embedding_extraction(
        g, g.ndata['feat'], device, 4096
    )  # result is held on the buffer device
tsne_embedding_plot(emb.detach().cpu().numpy(), meta)

100%|██████████| 1/1 [00:00<00:00, 310.41it/s]